A Louisiana sheriff's office recently agreed to pay $200,000 to a Georgia resident who was wrongly jailed in 2022.

Facial recognition technology misidentified the man—who had never been to Louisiana—as a suspect in a high-value theft case involving a local consignment shop.

This case raises serious questions about the risks of overrelying on biometric surveillance in law enforcement. But it’s not just a public sector issue. The same flaws can—and often do—show up in business identity verification flows.

Why does this happen, and what should companies do about it?

Let’s break it down.

How biometric verification works

When people hear “facial recognition,” they often picture different things. That’s because key terms in identity verification are commonly mixed up. To stay aligned, let’s clarify the basics.

Biometric verification is a broad term. It means confirming someone’s identity using their unique biological features—like their face, iris, or fingerprints.

Among these, facial biometrics are the most widely used. They’re convenient for customers and easy to process compared to other types. For example, while iris scans require expensive optics and fingerprints need dedicated sensors, most smartphones already have cameras good enough for face-based checks.

Biometric verification using facial data typically includes the following tasks:

Face detection—Locates a face in a photo or video. This is the foundation of facial verification.

Face recognition—Identifies a person by comparing their face against a database of known faces.

Face matching—Confirms if someone is who they claim to be by comparing their face to a known reference (e.g., from an ID).

Liveness detection—Verifies that the face belongs to a real person, and is not a deepfake, photo, screen image, or other artifact used during presentation attacks. It uses visual cues like texture, motion, or facial response to detect fraud.

These checks can be used in different combinations. A sports venue can recognize ticket holders by face at the gate. A bank may match a selfie with an ID photo to onboard a new customer or confirm a transaction by an instant face scan.

Regardless of the use case, facial verification systems always perform two critical tasks: finding a face and then comparing it with a reliable reference. Let’s take a closer look at the process:

Face detection is always one of the first steps. But detecting a face alone doesn’t confirm who the person is—or if it’s even a real person. That’s why liveness checks are essential, whether passive (analyzing image features) or active (asking the user to move, smile, etc.).
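The detection-then-liveness order described above can be sketched as a simple gate. This is a minimal illustration, not Regula’s implementation: `detect` and `liveness` are hypothetical stand-ins for separately trained models, and the 0.5 liveness cutoff is an arbitrary placeholder.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) of a detected face

@dataclass
class FrontEndResult:
    status: str                     # "no_face", "not_live", or "ready_for_comparison"
    face_box: Optional[Box] = None

def run_front_end(image: object,
                  detect: Callable[[object], Optional[Box]],
                  liveness: Callable[[object, Box], float],
                  liveness_threshold: float = 0.5) -> FrontEndResult:
    """Detect a face first; only a face that passes liveness moves on to comparison."""
    box = detect(image)
    if box is None:
        # No face found: nothing to compare, so ask the user to try again.
        return FrontEndResult("no_face")
    if liveness(image, box) < liveness_threshold:
        # A face was found, but it may be a photo, screen image, or deepfake.
        return FrontEndResult("not_live", box)
    return FrontEndResult("ready_for_comparison", box)
```

Keeping the two checks as separate callables mirrors the idea that each step is handled by its own specialized model.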

Once a face is detected and confirmed as live, the system either identifies or authenticates the person. Here’s the difference:

Face recognition asks: “Who are you?” It works with unknown individuals and searches for a match in large databases, helping prevent concealer attacks in which a person tries to hide their real identity. Law enforcement uses it to find missing or wanted persons.

Face matching asks: “Are you really who you claim to be?” It works with known individuals and verifies identity using ID documents or previously submitted photos—often used by businesses for login flows. Face matching helps prevent impostor attacks in which an individual pretends to be someone else.

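| Task | Face recognition (prevents concealer attacks) | Face matching (prevents impostor attacks) |
|---|---|---|
| Comparison type | One-to-many (1:N) | One-to-one (1:1) |
| Context | “I don’t know you” | “I know you, but prove it” |
| Application | Finding missing or wanted persons; recognizing ticket holders at a venue gate | Onboarding by matching a selfie to an ID photo; login and transaction confirmation |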
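The difference between 1:1 and 1:N comparison is easiest to see in code. The sketch below assumes that each face has already been converted into a numeric embedding vector by some face model, and it uses cosine similarity as the score. Both the vectors and the threshold are hypothetical, and real systems may use other similarity measures.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]

def cosine_similarity(a: Vector, b: Vector) -> float:
    """Similarity of two embeddings: 1.0 means they point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def verify(probe: Vector, reference: Vector, threshold: float) -> bool:
    """Face matching (1:1): is the probe the same person as one known reference?"""
    return cosine_similarity(probe, reference) >= threshold

def identify(probe: Vector, gallery: Dict[str, Vector],
             threshold: float) -> Optional[Tuple[str, float]]:
    """Face recognition (1:N): which enrolled identity, if any, best matches the probe?"""
    best_id, best_score = None, -1.0
    for identity, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = identity, score
    # Even the best candidate must clear the threshold; otherwise report no match.
    if best_id is not None and best_score >= threshold:
        return best_id, best_score
    return None
```

Note that `identify` compares the probe against every enrolled template, so the larger the gallery, the more chances there are for a different person to score above the threshold by coincidence. This is one reason 1:N searches deserve extra caution.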

These tasks are powered by neural networks. Today, many people associate neural networks with large, general-purpose AI models, which raises privacy and compliance concerns. But identity verification (IDV) providers like Regula don’t use commercial AI tools for identity verification. Instead, they rely on highly specialized, secure networks trained for narrow, mission-specific tasks in a closed, controlled environment.

Think of such a neural network as a dedicated machine in a robotic factory: each one has its own “specialization” and is trained to perform a particular task. This means the same neural network isn’t used for both liveness detection and face comparison. Each model serves one practical task, for instance:

Segment: Marks a face with a rectangular area and defines its landmarks.

Classify: Detects whether a face is male or female.

Regress: Estimates the age of the person in the picture.


Do neural networks make mistakes?

Here’s a common cognitive bias: people readily accept that other people, such as human inspectors, can make mistakes, yet they tend to trust computer systems without question.

The rise of neural networks (NNs) has only strengthened this belief. But NNs are merely loosely modeled on the human brain, and, like people, they can be wrong. In fact, training an NN is similar to teaching a child: for facial verification, the network learns to recognize objects by being shown many pictures.

This process can be illustrated with a simple analogy. Imagine a movie theater where only the spectators in the first row can see the screen. They describe what they see to the people in the second row, who pass it on to the third, and so on. Finally, the last row decides what exactly is on the screen.

In a real NN, many rules determine how the input data is passed along, how the final conclusion is reached, and how “the first row” receives feedback. However, the deep layers of an NN, much like the unconscious parts of the brain, typically can’t be accessed or adjusted manually.
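The theater analogy maps onto a feedforward pass: each layer (row) receives only the previous layer’s output, and the final layer produces the verdict. The toy network below uses made-up weights purely for illustration; real face models are far larger and learn their weights during training, which is why their inner layers are hard to inspect by hand.

```python
import math
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights per neuron, bias per neuron)

def dense_layer(inputs: Sequence[float], weights: List[List[float]],
                biases: List[float]) -> List[float]:
    """One 'row' of the theater: it sees only the previous row's output."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU: pass positive signals, silence negative ones
    return outputs

def forward(inputs: Sequence[float], layers: List[Layer]) -> float:
    """Pass the input row by row; the last row's output becomes a 0-1 score."""
    signal = list(inputs)
    for weights, biases in layers:
        signal = dense_layer(signal, weights, biases)
    return 1.0 / (1.0 + math.exp(-sum(signal)))  # sigmoid squashes the verdict into (0, 1)
```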

Nevertheless, NNs are trained and tested under developers’ control. Typically, two separate image datasets are used, one for training and one for testing, and they must never be mixed: otherwise, the test would measure how well the network remembers familiar images rather than how it handles new ones, producing misleading metrics. This is usually a long cycle of training and testing iterations, during which NNs process both new and old face samples to improve their results in detection, recognition, matching, and other tasks. Additionally, independent laboratories such as the US National Institute of Standards and Technology (NIST) test facial verification systems and publish benchmarks for all sorts of tasks.
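One common way to keep training and test data from mixing in face tasks is to split by person rather than by image, so no individual seen during training reappears at test time. The sketch assumes samples are `(person_id, image_path)` pairs; the function name and the 20% test share are illustrative choices, not a description of any vendor’s pipeline.

```python
import random
from typing import List, Tuple

Sample = Tuple[str, str]  # (person_id, image_path)

def split_by_identity(samples: List[Sample], test_fraction: float = 0.2,
                      seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split samples so that no person appears in both the training and test sets."""
    people = sorted({person for person, _ in samples})
    random.Random(seed).shuffle(people)          # reproducible shuffle of identities
    n_test = max(1, int(len(people) * test_fraction))
    test_people = set(people[:n_test])
    train = [s for s in samples if s[0] not in test_people]
    test = [s for s in samples if s[0] in test_people]
    return train, test
```

Splitting by identity is stricter than splitting by image: two photos of the same person can be nearly identical, so an image-level split can quietly leak test faces into training.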

However, the strictest test is a practical application in a real-world use case, where NNs must handle a unique set of real faces. This is why it’s critical to properly test identity verification solutions before large-scale implementation.

Back to the question this section begins with: yes, NNs can make mistakes. Now, let’s see exactly what causes failures in facial verification.

3 main reasons why biometric checks fail

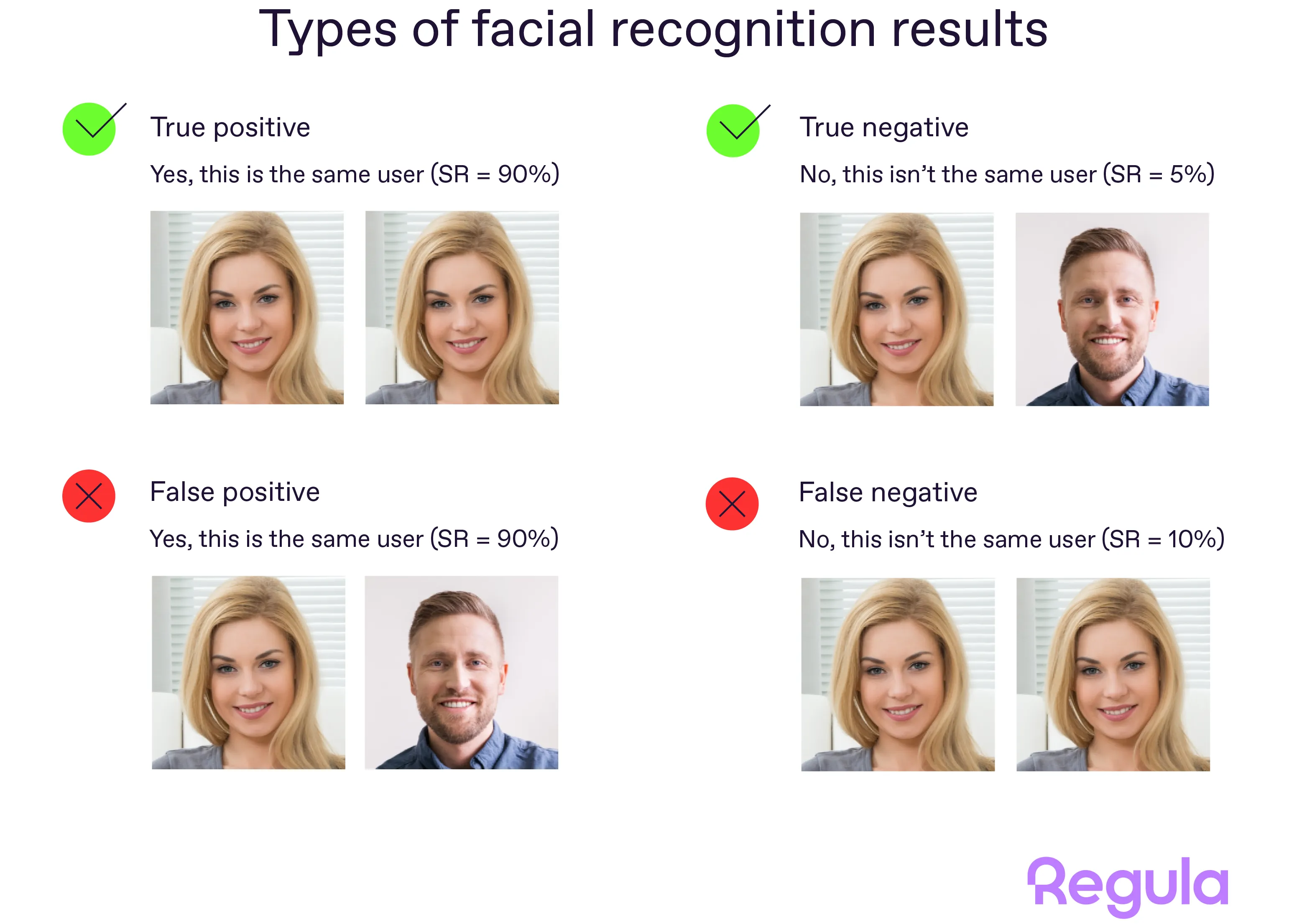

First, what is a mistake in biometric verification? To understand this, it’s better to begin with the possible results that facial verification systems can produce. There are only four options:

True positive

False positive

True negative

False negative

These outcomes are easier to understand with an example. Take facial recognition, where the system needs to identify an unknown user by comparing their photo to a database of confirmed identities. Each comparison is scored with a versatile metric, the similarity rate (SR), so a result might read: X is similar to Y at 87%.

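Here is what each outcome means in this example. Suppose the system reports a match whenever the SR reaches a set threshold. If X and Y are in fact the same person, a reported match is a true positive, and a missed one is a false negative. If X and Y are different people, correctly reporting no match is a true negative, while reporting a match is a false positive. The wrongful jailing described at the start of this article is a false positive.

So there are two general types of mistakes: false positives and false negatives. Why do they happen?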
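To make the four outcomes concrete, the function below labels a single comparison given its similarity score, a decision threshold, and the ground truth (whether the two faces really belong to the same person). Scores are on a 0 to 1 scale, so 87% is 0.87; the threshold values are hypothetical.

```python
def classify_outcome(similarity: float, threshold: float, same_person: bool) -> str:
    """Label one comparison as a true/false positive or a true/false negative."""
    predicted_match = similarity >= threshold
    if predicted_match and same_person:
        return "true positive"
    if predicted_match and not same_person:
        return "false positive"   # e.g., an innocent person flagged as a suspect
    if not predicted_match and not same_person:
        return "true negative"
    return "false negative"       # a genuine match the system missed
```

For instance, `classify_outcome(0.87, 0.85, same_person=False)` returns `"false positive"`: the same 87% score that looks convincing is a mistake when the two faces belong to different people.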

1. Dataset inconsistencies

This part is usually out of the control of IDV vendors’ clients. However, it’s critical to find out the main characteristics of the datasets used by the developers to train their NNs.

These include:

Size of the datasets (this should align with your workflow).

Sample demographics (country, age, gender, etc.).

Image characteristics (mobile camera shots, images taken in a controlled environment, AI-generated selfies, etc.).

If there are more similarities than differences with your particular use case, chances are the facial verification system will perform effectively in real life.
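If a vendor shares the composition of its training data, you can compare it with a sample of your own traffic. The sketch below is hypothetical: it assumes you have the vendor’s proportions per group (for example, image source or age band) and labels for your own images, and it flags groups that are common for you but rare in the vendor’s data. The 0.5 ratio is an arbitrary cutoff.

```python
from collections import Counter
from typing import Dict, Iterable

def shares(labels: Iterable[str]) -> Dict[str, float]:
    """Convert a list of group labels into proportions that sum to 1."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def coverage_gaps(vendor_shares: Dict[str, float], your_labels: Iterable[str],
                  min_ratio: float = 0.5) -> Dict[str, float]:
    """Flag groups whose vendor share is below min_ratio times your share.

    Returns {group: vendor_share / your_share} for each flagged group.
    """
    gaps = {}
    for group, your_share in shares(your_labels).items():
        vendor_share = vendor_shares.get(group, 0.0)  # a missing group counts as 0%
        if vendor_share < min_ratio * your_share:
            gaps[group] = vendor_share / your_share
    return gaps
```

With vendor shares of 80% studio photos and 20% mobile selfies, traffic that is 70% mobile selfies would flag `"mobile selfie"`, suggesting the vendor’s test results may not transfer to your use case.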

2. Similarity rate settings

It’s important to adjust the facial verification system to the business case. Each biometric solution has a threshold that determines the degree of similarity at which compared images are classified as a match or a non-match. In plain English: is 90% similarity required to accept a match, or is 85% enough?

No system achieves 100% accuracy, and where to set the threshold always depends on the context: different businesses may consider different similarity rates acceptable. Changing the threshold also affects business metrics, such as the churn rate. For example, a stricter threshold tends to reject more genuine users, which in practice means demanding ultra-high-quality selfies, and some users will abandon the process.

The optimal balance between security and user convenience can be found during trial tests when you evaluate the performance of a biometric system for your business. So be prepared to adjust thresholds and settings multiple times.
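During trial tests, a threshold sweep over labelled comparison pairs shows this trade-off directly. The sketch below computes the false match rate (FMR, impostor pairs wrongly accepted) and the false non-match rate (FNMR, genuine pairs wrongly rejected) at each candidate threshold; the data format and function names are assumptions for illustration.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, bool]  # (similarity score on a 0-1 scale, same_person ground truth)

def error_rates(pairs: Sequence[Pair], threshold: float) -> Tuple[float, float]:
    """Return (FMR, FNMR) at one threshold. Assumes both pair types are present."""
    impostor = [s for s, same in pairs if not same]
    genuine = [s for s, same in pairs if same]
    fmr = sum(s >= threshold for s in impostor) / len(impostor)   # impostors accepted
    fnmr = sum(s < threshold for s in genuine) / len(genuine)     # genuine users rejected
    return fmr, fnmr

def sweep(pairs: Sequence[Pair],
          thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Evaluate several candidate thresholds: [(threshold, FMR, FNMR), ...]."""
    return [(t, *error_rates(pairs, t)) for t in thresholds]
```

Raising the threshold can only lower (or keep) the FMR and can only raise (or keep) the FNMR, so the sweep puts the security-versus-convenience trade-off into numbers before you pick a setting.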

3. Poor user experience (UX)

When it comes to selfie verification, image quality plays a critical role. In some workflows, a human inspector captures the photo with proper lighting and focus, whether at a border checkpoint, in a physical office, or even during a video call.

In many cases, however, users take selfies on their own. Without prompts and instructions, they may submit poor images that lead to retakes or even false positives or negatives. To perform well, a facial verification system should therefore be customer-centric and accessible to different groups of users.
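Simple pre-capture checks can turn image problems into instructions before the selfie is submitted. This is a hypothetical sketch: it assumes a face detector has already returned a bounding box and that the frame is available as grayscale values, and the brightness and face-size limits are placeholders to be tuned in your own tests.

```python
from statistics import mean
from typing import List, Optional, Tuple

# Hypothetical limits; real values should come from your own trial tests.
MIN_BRIGHTNESS, MAX_BRIGHTNESS = 60, 200   # mean gray level on a 0-255 scale
MIN_FACE_AREA_RATIO = 0.10                 # face box should fill at least 10% of the frame

def capture_feedback(gray_pixels: List[int], frame_size: Tuple[int, int],
                     face_box: Optional[Tuple[int, int, int, int]]) -> List[str]:
    """Return user-facing prompts; an empty list means the selfie can be submitted.

    face_box is (x, y, width, height) from a face detector, or None if no face was found.
    """
    if face_box is None:
        return ["Position your face inside the frame."]
    prompts = []
    brightness = mean(gray_pixels)
    if brightness < MIN_BRIGHTNESS:
        prompts.append("Move to a brighter place.")
    elif brightness > MAX_BRIGHTNESS:
        prompts.append("Too much light: avoid direct glare.")
    frame_w, frame_h = frame_size
    _, _, face_w, face_h = face_box
    if (face_w * face_h) / (frame_w * frame_h) < MIN_FACE_AREA_RATIO:
        prompts.append("Move closer to the camera.")
    return prompts
```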

How to avoid mistakes while keeping fraudsters out

Wrapping up, here are the key takeaways:

Computers can (and do!) make mistakes. For example, overreliance on AI-powered face recognition has led to wrongful detentions by law enforcement. This means each result should be regarded not as a source of truth, but as an expert opinion that may still be wrong. It’s also reasonable to have a backup plan for such cases. For instance, matchgoers can be authenticated by their IDs if the facial recognition fast lane doesn’t work. In remote scenarios, users can be invited to join a call with a human inspector.

NNs are trained on a variety of datasets, which may not match the images your company works with. Dataset characteristics affect facial verification results the most, so make sure an IDV vendor delivers reliable results on images similar to the selfies you verify every day.

Each biometric verification system must be tuned on key settings, such as the similarity threshold. Testing the solution in real conditions is the best way to do this.

Image quality matters. If you verify customers remotely, focus on UX in your application to help them take an appropriate selfie on the first try.
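The backup-plan takeaway can be expressed as a routing rule rather than a single pass/fail cut. In this hypothetical sketch, only high-confidence matches are accepted automatically; scores in a middle band go to a human inspector, and low scores trigger a retry or an alternative check such as an ID document. Both cutoffs are illustrative placeholders.

```python
def route_decision(similarity: float, accept_at: float = 0.90,
                   review_at: float = 0.70) -> str:
    """Decide what to do with a face comparison score (0 to 1 scale).

    The thresholds are hypothetical placeholders; tune them in trial tests.
    """
    if review_at > accept_at:
        raise ValueError("review_at must not exceed accept_at")
    if similarity >= accept_at:
        return "accept"                # strong match: proceed automatically
    if similarity >= review_at:
        return "human_review"          # uncertain: treat the score as an opinion, let a person decide
    return "retry_or_alternative"      # weak match: retake the selfie or check an ID instead
```

Routing the uncertain band to a person keeps the system’s score in the role of an expert opinion rather than a final verdict.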

At Regula, we rely on these principles when developing our biometric verification solution—Regula Face SDK. This is 100% on-prem software that can be fully customized to deliver the results you expect across different industries—from mobility services to major banks.

Have any questions about how we train NNs or run tests? Don’t hesitate to book a call!