Updated November 26, 2025

Since the beginning of the year, interest in deepfakes has surged. According to Google Trends, searches for “deepfake AI tool” are up 180%, “deepfake detection” by 160%, and “deepfake definition” by 150%.

While the public is still trying to grasp what deepfakes are, fraudsters have already made them part of their toolkit—along with other methods, both common and unconventional—reshaping how identity fraud works.

How are businesses responding to these threats? Are existing fraud prevention systems enough to detect and stop AI-driven attacks? Which tools still work—and which are outdated?

To explore these questions, Regula surveyed fraud prevention and financial crime professionals across four global markets (the US, Germany, the UAE, and Singapore), representing companies in Aviation, Banking, Crypto, Fintech, Healthcare, and Telecommunications.

Here is what we found.

Key findings

- The line between traditional and impersonation attacks has blurred: both are now common and equally active.

- Smaller companies tend to face fewer AI-driven fraud attempts, while larger enterprises are exposed to more frequent and sophisticated incidents.

- The finance-related sector is among the most affected by impersonation techniques, including deepfakes, biometric fraud attempts, and identity spoofing.

- A multi-layered defense strategy is more critical than ever for effective fraud detection and prevention.

What are the mainstream fraud tactics today?

In our 2022 identity fraud survey, AI-driven attacks were just emerging, and detecting synthetic identities was a top priority. Fast forward to 2024, and the threat landscape has expanded. In addition to those risks, many companies reported fake and altered physical documents among the main attacks they needed to handle.

Today, these threats have been overtaken by more advanced and varied tactics, including:

Identity spoofing

A significant percentage of organizations (34%) reported identity spoofing—using photos, videos, or other media to impersonate someone—as a common threat. Although it’s considered a traditional fraud tactic, it’s still effective. It typically involves presenting printouts, video replays, or images displayed on a screen during selfie verification.

Interestingly, this method is increasingly targeting medium-sized companies (31% of respondents) and large enterprises (39.7%), especially in the banking industry (34%). Here, spoofing is often used to open accounts linked to scams or mule networks.

Geographically, businesses in the UAE and Germany (36%) reported the highest number of such incidents over the past year.

Biometric fraud

Using fake or stolen biometric data to bypass security is another major threat, reported by 34% of businesses. This type of attack includes the use of physical artifacts—such as fake fingerprints, silicone masks, or 3D face models—to deceive biometric sensors. These methods are commonly used for account takeovers and SIM swaps.

Mid-sized companies reported this type of attack most often (36%), as did businesses in healthcare (36%) and in the finance-related and crypto sectors (both 35%). Geographically, businesses in Singapore (36%) suffered the most from biometric fraud incidents.

Deepfake fraud

Attacks involving AI-generated faces, voices, or videos to convincingly mimic or invent identities ranked among the top three threats (33%). Deepfakes are often used in presentation attacks to trick the system into believing a real person is in front of the camera. Typically, they are deployed to bypass biometric verification that relies on live video.

According to the survey, 36% of companies affected by this type of fraud were large—most of them in the fintech (38.6%), aviation (37%), and banking (33%) sectors, where video-based Know Your Customer (KYC) processes are widely used. Among all countries, the UAE reported the highest share of companies encountering deepfakes (35%).

Other common fraud tactics

Identity spoofing, biometric fraud, and deepfakes now occur just as often as traditional methods like document fraud (30%), social engineering scams (30%), and synthetic identities (29%). The latter, once considered a “brand-new” and rising threat in 2022, is now a more familiar tactic used less frequently by fraudsters.

Notably, most of these fraudulent activities target the onboarding stage of first-time customers. This makes the initial interaction between a user and a company the most vulnerable point—one that requires stronger protection. However, regular customers aren’t immune either. Their credentials and accounts remain prime targets as well.

“Fraudsters are no longer breaking in through the back door—they’re walking through the front. The verification step itself has become the primary target. Criminals create fake but ‘clean’ identities that look legitimate from day one, making downstream fraud detection nearly powerless. Onboarding is now the battleground.”

How companies fight back: Top fraud prevention approaches

The main takeaway from the respondents’ answers is that companies usually combine several tactics when building their fraud prevention and detection strategies. However, some methods are used more often than others across industries. The three most common approaches are:

Multi-factor authentication (MFA)

As part of a layered security approach, MFA uses at least two different factors to confirm that the user accessing a system is legitimate. These factors can include biometrics, one-time passwords (OTPs), traditional passwords, security keys, or authenticator apps.
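To make the "at least two different factors" rule concrete, here is a minimal sketch in Python. It assumes the widely used grouping of factors into knowledge, possession, and inherence categories; the method names and the `satisfies_mfa` helper are hypothetical and serve only as an illustration, not as a description of any particular product.

```python
from enum import Enum


class FactorCategory(Enum):
    KNOWLEDGE = "something you know"
    POSSESSION = "something you have"
    INHERENCE = "something you are"


# Illustrative mapping (an assumption) of the methods named above to categories.
FACTOR_CATEGORIES = {
    "password": FactorCategory.KNOWLEDGE,
    "otp": FactorCategory.POSSESSION,
    "security_key": FactorCategory.POSSESSION,
    "authenticator_app": FactorCategory.POSSESSION,
    "biometric": FactorCategory.INHERENCE,
}


def satisfies_mfa(verified_methods, required_categories=2):
    """Return True if the verified methods span enough distinct categories.

    Unknown method names are ignored rather than counted.
    """
    categories = {
        FACTOR_CATEGORIES[method]
        for method in verified_methods
        if method in FACTOR_CATEGORIES
    }
    return len(categories) >= required_categories
```

Under this sketch, a password plus an OTP passes the check, while an OTP plus a security key does not, because both belong to the same category. Whether two possession-based methods should count as "different factors" is a policy decision that each company has to make for itself.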

MFA is now a standard part of many digital services and workplace cultures, meaning most users are familiar with it and willing to comply. Not surprisingly, around a quarter (24%) of survey respondents said they use MFA. The approach is most commonly adopted in the UAE (29%) and Singapore (25%), while only 19% of German companies report using it.

There is also a correlation between MFA usage and company size—larger enterprises with structured security policies are more likely to implement MFA than smaller businesses. By industry, healthcare leads with 29% adoption, followed closely by crypto and banking (both at 28%). Aviation companies lag behind, with only 17% using MFA.

Behavioral biometrics

Used by 23% of respondents, this method analyzes users’ unique behaviors—such as typing speed, mouse movement, and touchscreen patterns—during their activity in a system. Tracking behavioral biometrics helps detect suspicious actions under a user account and trigger an appropriate response, including temporary account blocking if needed.
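As a rough illustration of the idea, the sketch below compares one behavioral measurement from the current session (for example, typing speed) with the same user's historical baseline and flags large deviations. It is a simplified assumption, not how production systems work: real behavioral biometrics combine many signals and far richer models. The function names and the threshold of three standard deviations are hypothetical.

```python
from statistics import mean, stdev


def anomaly_score(baseline, observed):
    """Return how many standard deviations `observed` lies from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    spread = stdev(baseline)
    center = mean(baseline)
    if spread == 0:
        # A perfectly constant baseline: any deviation at all is maximally unusual.
        return 0.0 if observed == center else float("inf")
    return abs(observed - center) / spread


def is_suspicious(baseline, observed, threshold=3.0):
    """Flag the session when the deviation exceeds the (hypothetical) threshold."""
    return anomaly_score(baseline, observed) > threshold
```

For a user whose past typing speeds cluster around 5 characters per second, a session at 5.1 would pass unnoticed, while a session at 9 would be flagged and could trigger a response such as a step-up check or a temporary block.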

This approach is most widely adopted in the UAE (27.5%) and Singapore (23%), while German companies are less likely to use it (18%).

Interestingly, behavioral biometrics are used across companies of all sizes, with a slight increase in adoption among large enterprises. By industry, the top adopters are healthcare (26%), followed by aviation and banking (both at 23%). At the other end of the spectrum, only 13% of HR-focused organizations report using this method.

Biometric verification and authentication

Biometrics remains one of the most reliable methods for both onboarding and authentication. It works by identifying users through face, fingerprint, iris, or voice. With 22% of respondents using it, biometrics completes the top three most common fraud prevention approaches.

This method is most frequently used by companies in the UAE (27%), while Germany shows the lowest adoption rate (14%). Enterprises lead in usage, with 29% reporting implementation.

By industry, biometric verification is primarily used by telecom companies (31%), followed by banking (24%) and fintech (22%). On the other hand, only 14% of healthcare organizations use this approach.

Other anti-fraud methods in place

As mentioned earlier, companies tend to combine multiple methods for stronger protection. Many also rely on:

- Dark web monitoring to catch alerts about stolen credentials or biometric data (reported by around 20% of respondents).

- Geolocation and IP analysis to detect suspicious access or the use of VPNs and proxies (both used by around 20%).

- Automated document verification (19.5%) to spot tampering in ID documents.

- Liveness detection for selfies and fraud analytics for real-time risk scoring (both used by 19%).

Additionally, about 18% of companies are shifting toward orchestration strategies—combining multiple IDV tools, adaptive security policies, and real-time fraud alerts as part of dynamic threat prevention.
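One way to picture orchestration is as a single decision layer that collects the outputs of separate checks and applies an adaptive policy. The sketch below is purely illustrative: the signal names, point values, and thresholds are hypothetical assumptions, and a real deployment would tune them on its own data.

```python
from dataclasses import dataclass

# Hypothetical risk points per triggered signal (assumptions, not survey data).
SIGNAL_POINTS = {
    "document_tampering": 40,
    "liveness_failed": 35,
    "credentials_on_dark_web": 15,
    "vpn_or_proxy": 10,
}


@dataclass(frozen=True)
class Decision:
    score: int
    action: str  # "approve", "step_up", or "reject"


def orchestrate(signals, step_up_at=10, reject_at=50):
    """Combine boolean check results into one risk score and pick an action."""
    score = sum(
        points
        for name, points in SIGNAL_POINTS.items()
        if signals.get(name, False)
    )
    if score >= reject_at:
        action = "reject"
    elif score >= step_up_at:
        action = "step_up"  # e.g. request MFA or route to manual review
    else:
        action = "approve"
    return Decision(score=score, action=action)
```

In this sketch, a lone VPN signal leads to a step-up check rather than a rejection, while document tampering combined with a failed liveness check is rejected outright. The design point is that no single tool makes the final call on its own.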

"The fraud landscape has changed dramatically, with sophisticated impersonation attacks becoming mainstream. The future of fraud prevention isn’t about relying on easy-to-deploy solutions in isolation. It’s about building resilient, layered defenses with advanced biometric verification as a backbone and orchestration tying it all together."

Are today’s tools enough to stop identity fraud?

When comparing the tools most companies now use with the setups IDV experts consider ideal, there is still a noticeable gap. The most requested additions across industries are biometric verification (23% of responses), human expert review, and MFA (both at 21%).

These priorities shift depending on the sector. For example, 24% of banking professionals see manual fraud checks by trained specialists as essential, especially for critical cases or regulatory requirements. Meanwhile, 31% of fintech companies still aim to adopt MFA more broadly.

Another key difference is the level of IDV automation. Today, 44% of companies say 51-75% of their identity processes are automated. This figure holds steady across businesses of all sizes.

Regionally, UAE-based companies lead in automation at 48%, though the number remains above 40% in all surveyed countries. By sector, telecom (54%) and healthcare (52%) show the highest levels of automation within the 51-75% range.

Still, three in ten organizations worldwide (30%) see nearly full automation as the goal. This ideal is far more common in healthcare (53%), while the aviation and crypto sectors match the overall figure (both 30%).

When it comes to what’s holding companies back, the most common hurdles aren’t technical—they’re organizational:

- Budget constraints (25% of all respondents)

- Regulatory compliance (23%)

- Departmental inconsistencies (23%)

These aren’t about the lack of tools, but rather internal budget limits, operational issues, and external regulatory pressure.

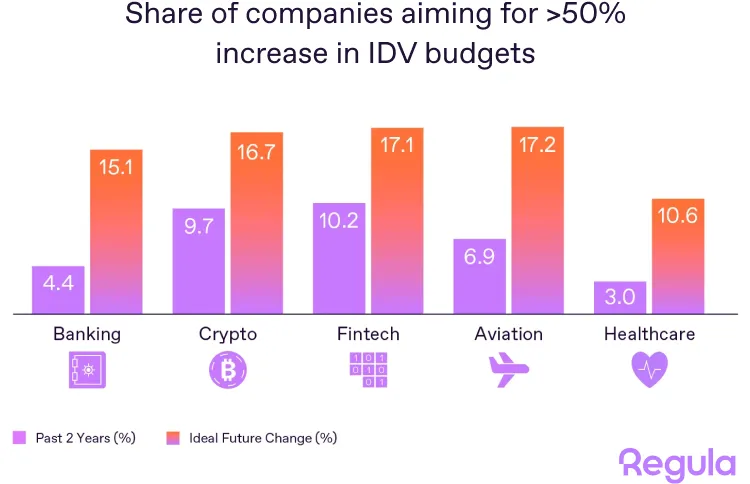

There’s hope for progress, though. Most companies are planning to grow their IDV budgets. In the past two years, 35% reported a 10-20% budget increase. Looking ahead, 32% believe a 21-50% boost would be ideal. This ambition varies across industries, but the upward trend is clear.

As more companies lean on AI to run their systems, the price of weak identity checks keeps climbing. It’s not just about fraud anymore—one mistake can lead to fines, headlines, and broken trust. That’s why more businesses now see trust not as a nice-to-have, but as something they need to build into their systems from the ground up.

“Executives have finally woken up to the deepfake economy. They’re realizing that identity verification isn’t a cost of doing business anymore, but a growth engine. Just as cybersecurity became non-negotiable a decade ago, identity verification is now core infrastructure for trust in the AI era.”

Get more insights on preventing IDV fraud!

Download the full report—free, with no strings attached. No emails, no calls. And if you’ve got questions, we’re happy to talk.