The role of age estimation and verification keeps expanding as more laws worldwide impose age gating. For example, the UK's Online Safety Act has put new duties on social media companies and search services to protect their United Kingdom users from content harmful to children.

Accordingly, the role of age verification software grows as well, and so does the importance of how accurately it operates. When looking for authoritative performance data, buyers and policymakers often turn to NIST's Face Analysis Technology Evaluation for Age Estimation & Verification (FATE-AEV).

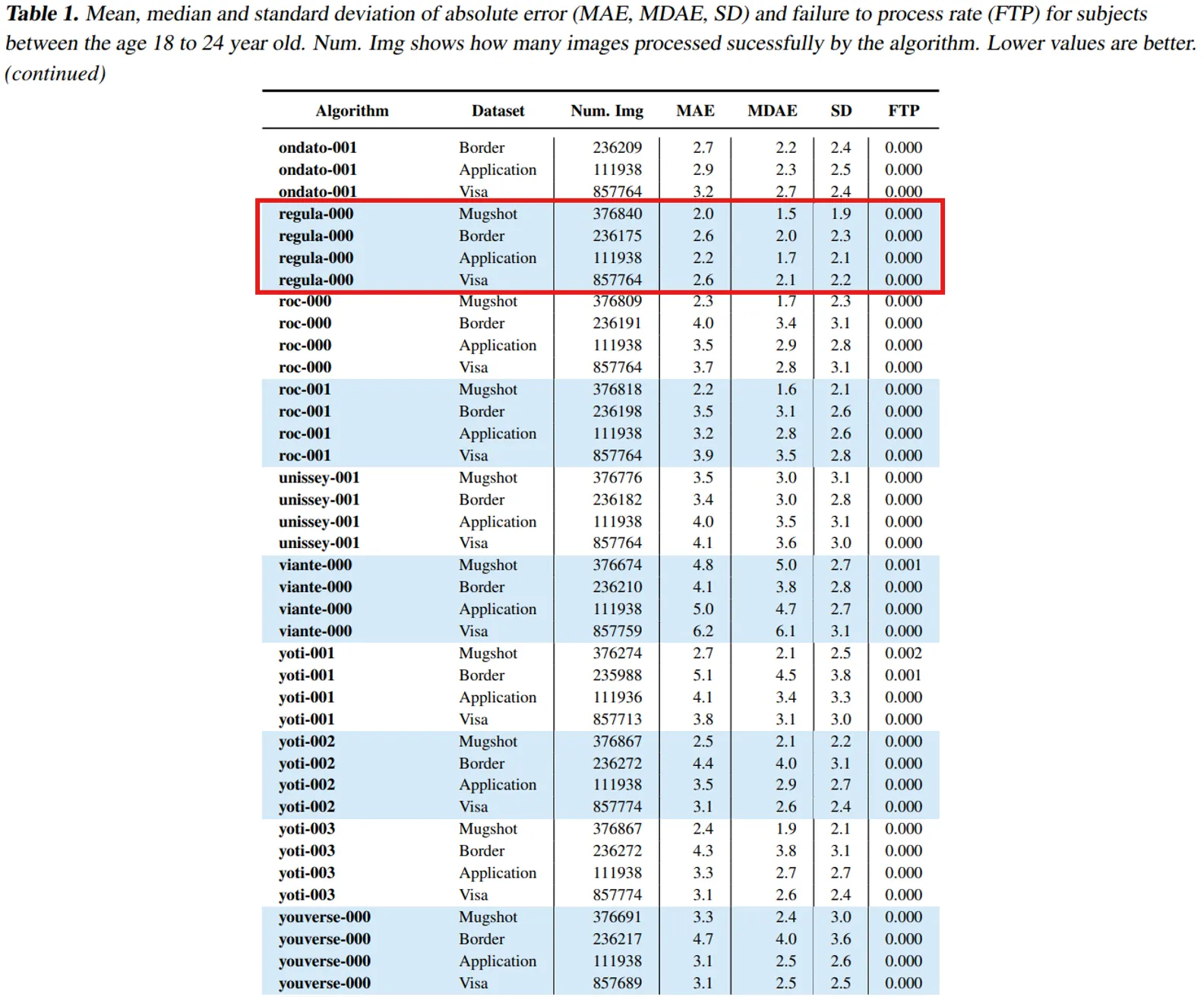

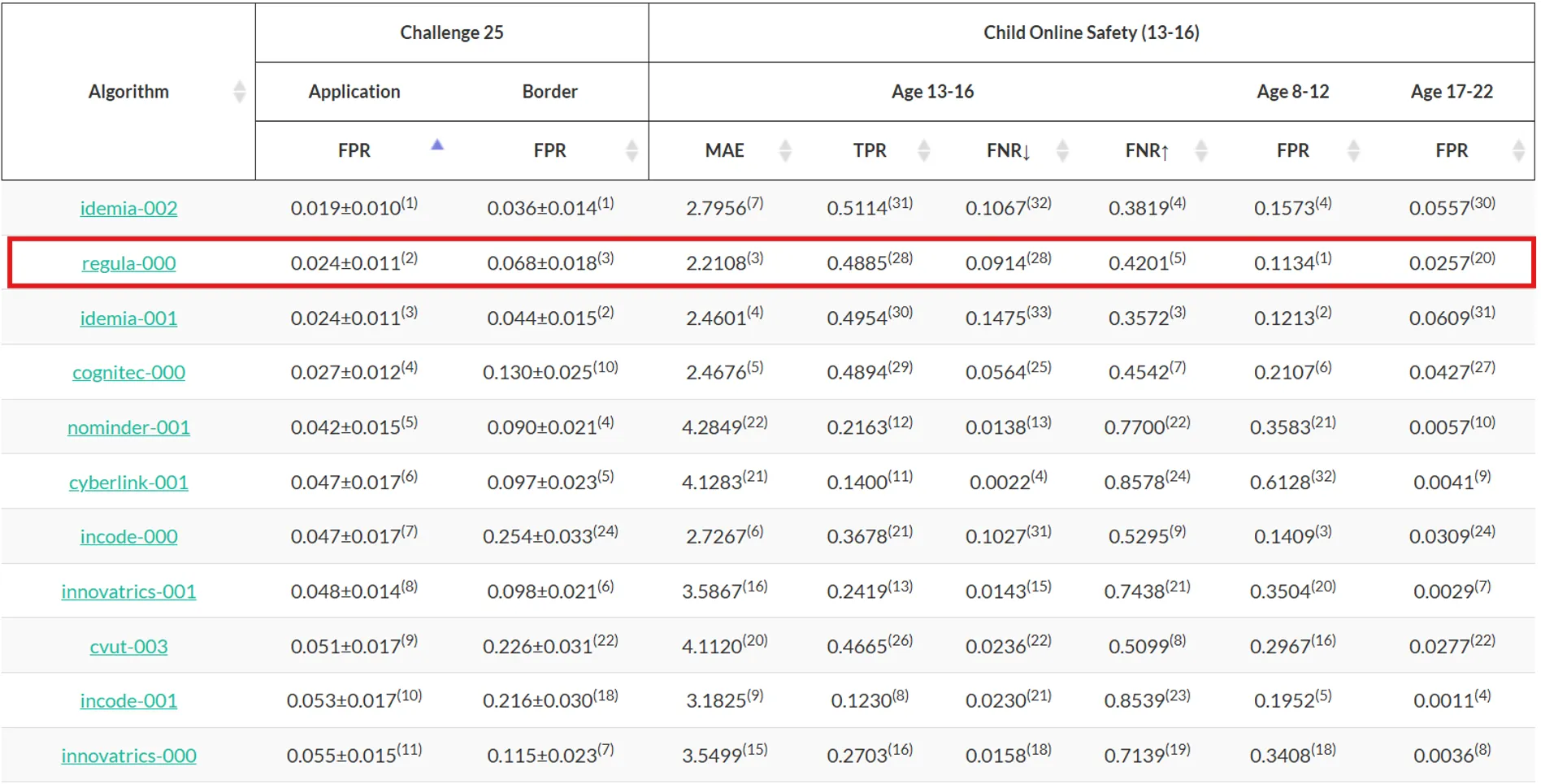

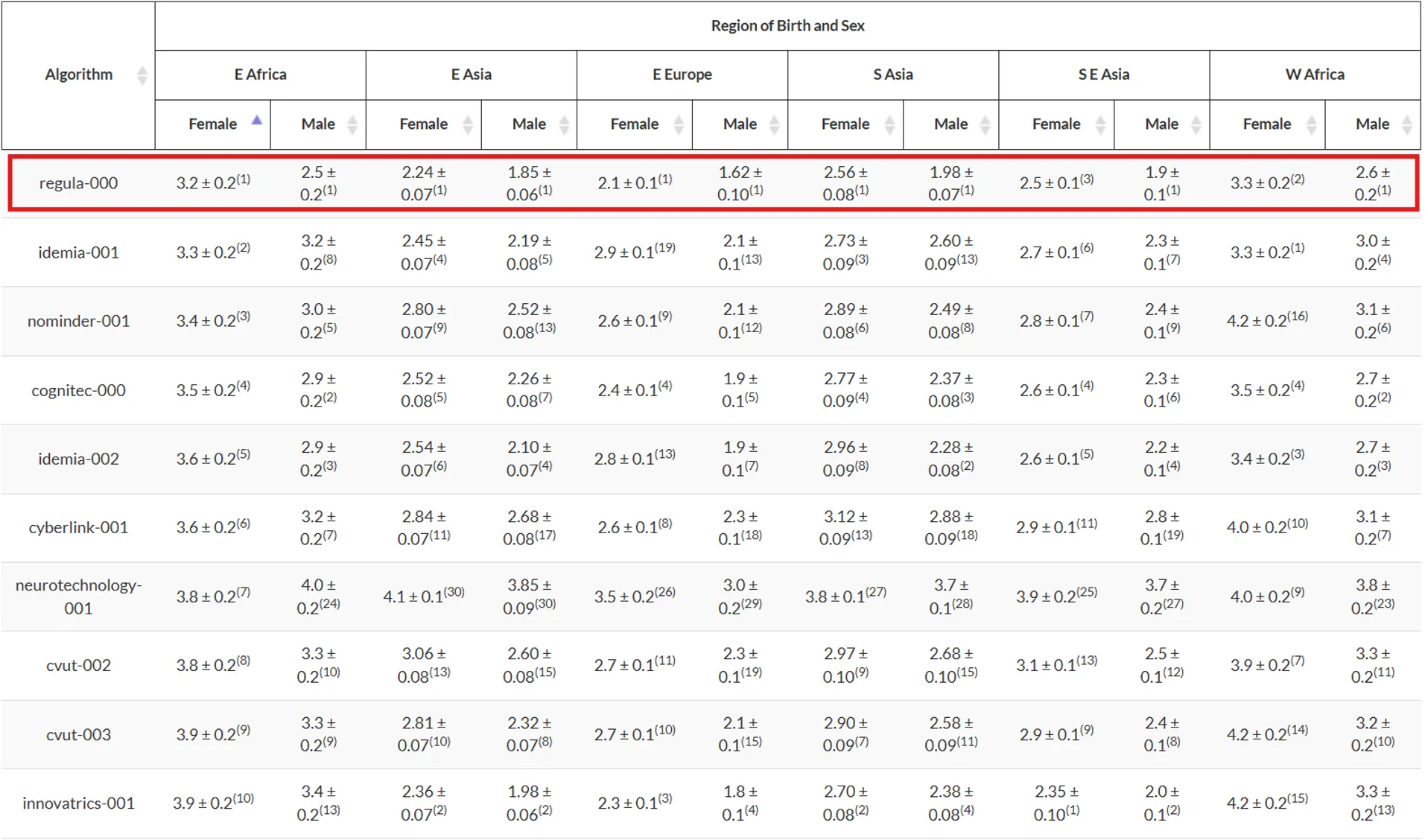

In the latest edition of FATE-AEV, Regula Face SDK has topped the list of ID verification vendors as the most accurate estimator across six geographic regions.

What does this mean in practice? What other metrics matter? What does NIST measure overall?

We will answer these questions and more as we break down NIST’s methodology and output.


What is NIST, and what do they test?

The US National Institute of Standards and Technology is a federal science lab that has been engaged in performance testing and methodology development since 1901. Essentially, NIST builds fair tests, runs them under controlled conditions, and publishes its findings for a wider audience to analyze the performance of different vendors and their solutions.

In the case of FATE-AEV, NIST runs technology evaluations where developers submit modules for age estimation and verification. The institute executes those modules inside its own infrastructure, publishes the methodology and metrics, and updates results as new entries arrive.

The results of FATE-AEV matter to three groups: deployers choosing a solution to implement, developers analyzing which factors affect accuracy, and policymakers who need to know whether measured capability fits a specific use case.

What exactly is being tested?

NIST runs tests on two related tasks:

Age Estimation (AE): The tested module outputs an estimated numeric age for a single face image. NIST computes how close predictions are to the ground truth across different datasets and cohorts.

Age Verification (AV): The tested module outputs a yes/no decision relative to a legal limit. NIST quantifies how many minors would pass unchallenged and how many adults would be wrongly challenged.

The data NIST uses

The evaluation runs on four very large operational archives with ground-truth age. Some datasets also include sex and country-of-birth labels. The sources are:

US mugshots after arrest.

Application photos captured in immigration offices.

Mexican visa photos.

US border-crossing webcam captures.

That mix matters because image quality, pose, optics, and age distribution differ across archives. According to NIST, accuracy varies by dataset, and rankings can shift when you change the dataset.

The most important NIST metrics

Some figures in NIST reports deserve more scrutiny than others. In this section, we have handpicked the most important metrics to pay attention to:

Accuracy metrics for estimation

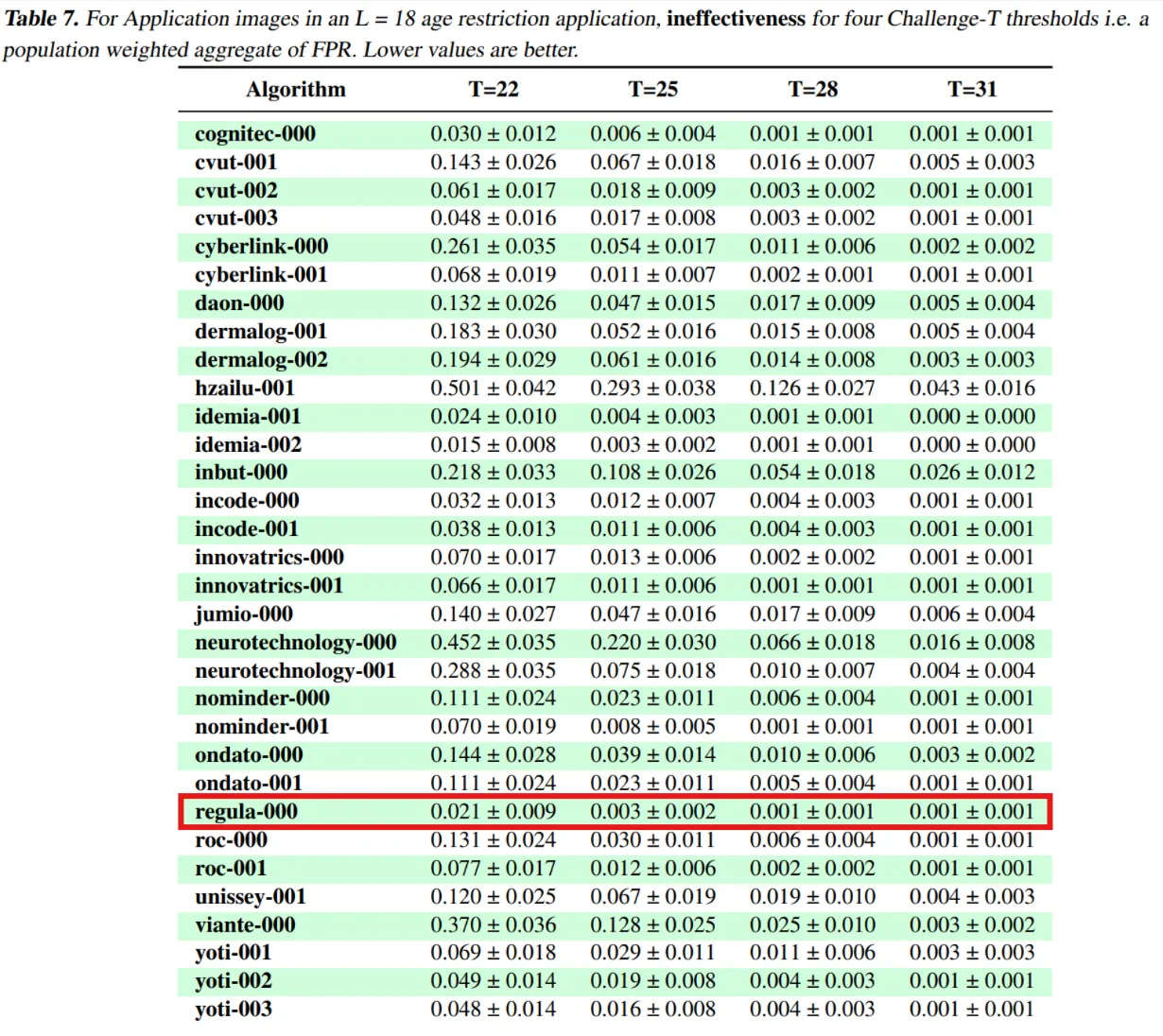

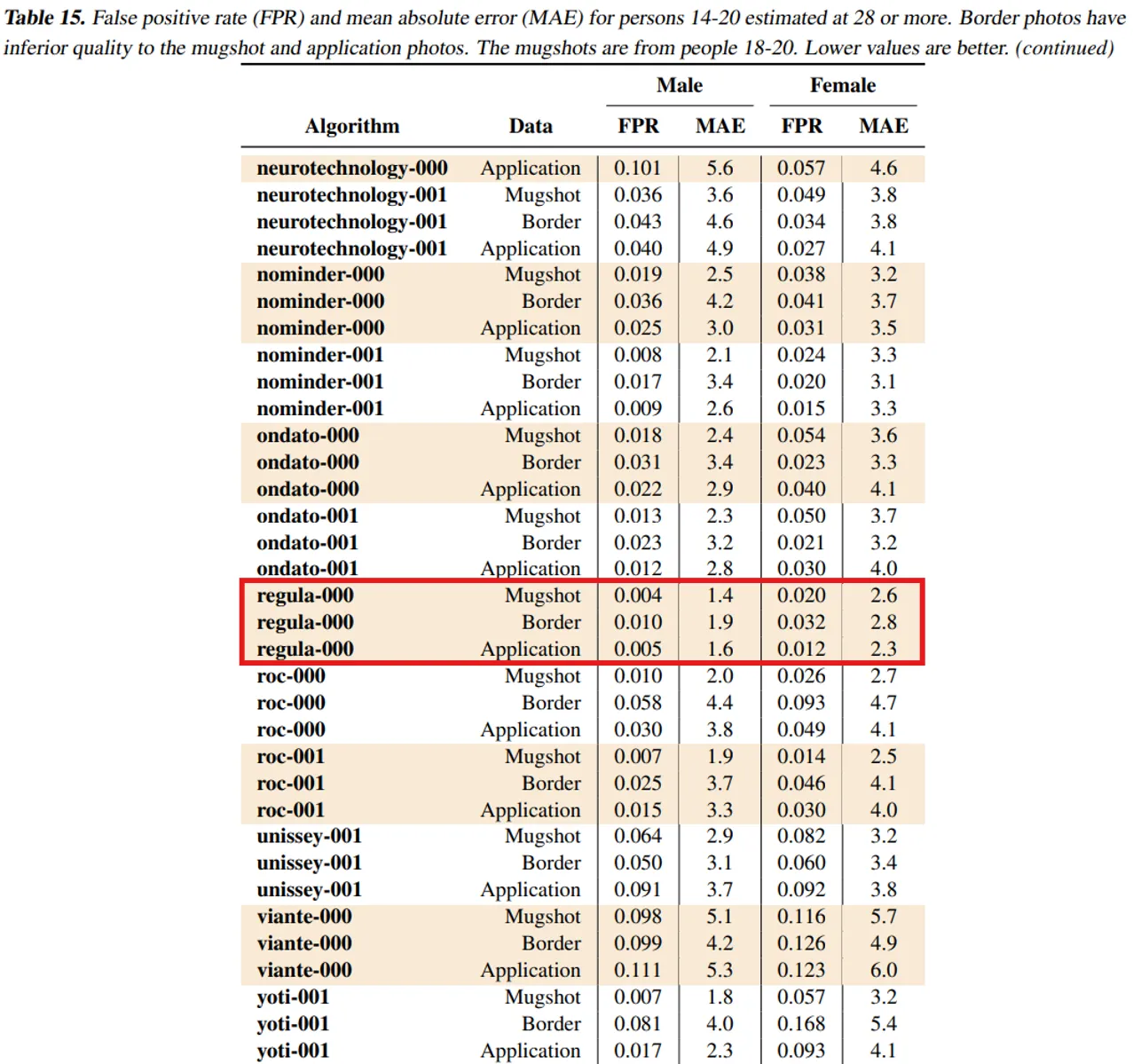

Mean absolute error (MAE): The average number of years the prediction is off across all samples. A lower number means the guesses sit closer to true age in that dataset, but it can hide weak spots in certain groups or image types.

Median absolute error (MDAE): The middle “years off” value once all errors are sorted (half the errors will be smaller than this number, half will be larger). It’s steadier than MAE when a few cases are very wrong, but it can conceal how large those rare mistakes are.

Standard deviation of absolute error: A measure of how tightly errors cluster around the average. Two systems with the same MAE can feel different if one has a much wider spread of errors.

Mean error (bias): This shows whether estimates lean old or young on average. Under a threshold check, an estimator that leans old might let more minors through, while one that leans young might challenge more adults near a limit.

Failure to process (FTP): The share of images that return no usable result. Even with good accuracy, a high FTP matters operationally, because "no result" usually pushes the user into a slower fallback check.
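To make the five definitions above concrete, here is a minimal sketch that computes them from paired ground-truth ages and estimates. The function name `age_error_summary` and the convention of marking a failure to process with `None` are our own illustrative assumptions, not part of NIST's tooling, and NIST's exact statistical conventions (for example, population versus sample standard deviation) may differ.

```python
import statistics
from typing import Dict, Optional, Sequence


def age_error_summary(
    true_ages: Sequence[float], estimates: Sequence[Optional[float]]
) -> Dict[str, float]:
    """Summarize age-estimation accuracy; None marks a failure to process."""
    if not true_ages or len(true_ages) != len(estimates):
        raise ValueError("need equal-length, non-empty inputs")
    # Keep only samples that produced a usable estimate.
    pairs = [(t, e) for t, e in zip(true_ages, estimates) if e is not None]
    if not pairs:
        raise ValueError("no usable estimates: every sample failed to process")
    signed = [e - t for t, e in pairs]  # positive = estimate older than truth
    absolute = [abs(d) for d in signed]
    return {
        "MAE": statistics.fmean(absolute),
        "MDAE": statistics.median(absolute),
        # Population SD of absolute errors (an assumption; conventions vary).
        "SD_abs": statistics.pstdev(absolute),
        "bias": statistics.fmean(signed),  # mean signed error
        "FTP": 1 - len(pairs) / len(true_ages),
    }
```

For example, `age_error_summary([20, 30, 40, 50, 17], [22, 27, 41, 50, None])` gives an MAE and MDAE of 1.5 years, zero bias, and an FTP of about 0.2, since one image in five produced no estimate. Note that failed samples are excluded from the error metrics, which is exactly why FTP needs to be reported alongside them.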

Challenge-T: The deployment knob

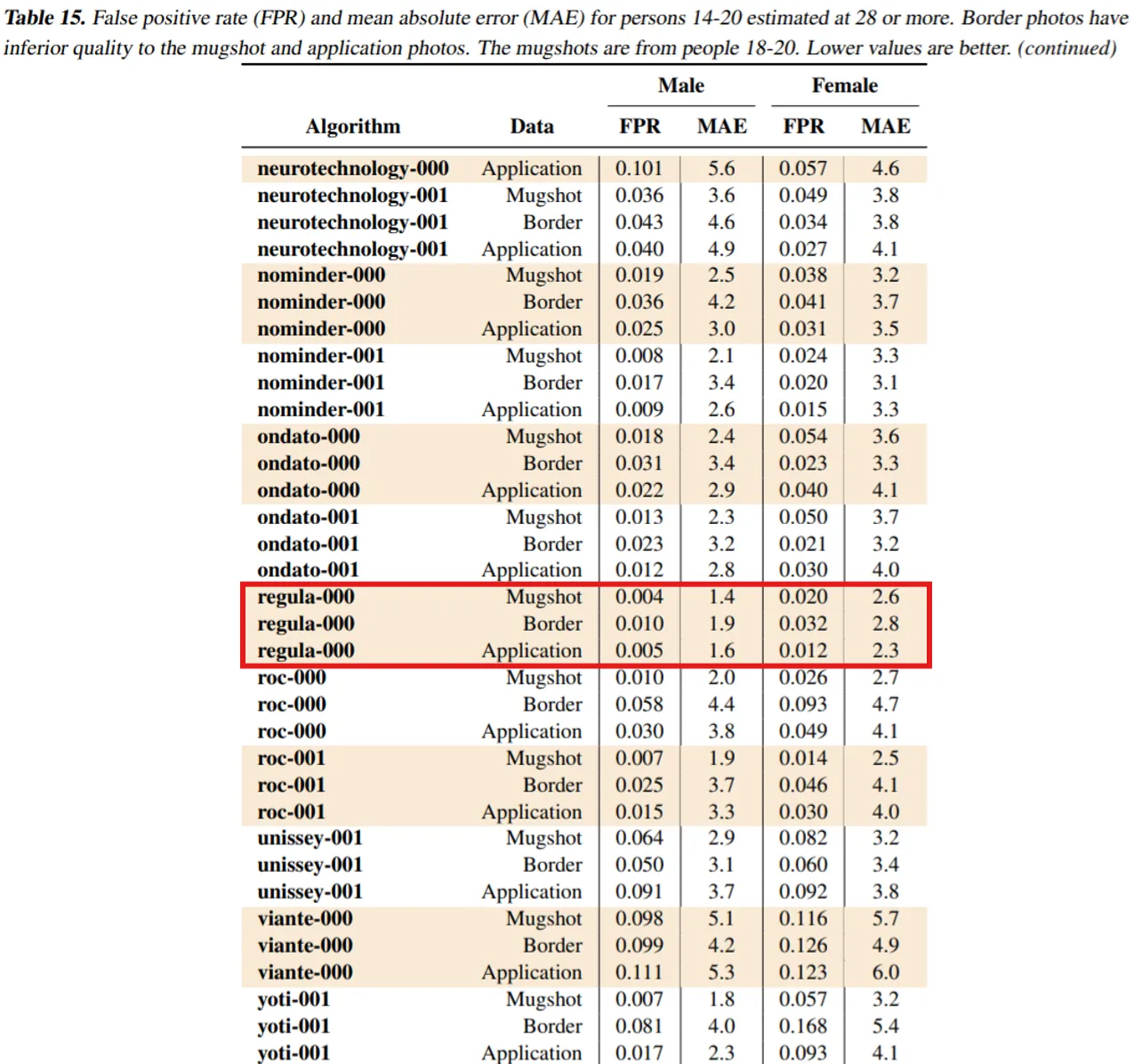

Challenge-T is the essential bridge from lab numbers to policy tradeoffs. T is the threshold that age estimates are checked against, and NIST recommends setting it above the legal age limit (L) by a margin: for example, for L = 18, T = 25 is common. In the latest report, NIST uses several values of T: not only 25, but also 22, 28, and 31.

If AE ≥ T, no extra checks are made (the subject passes as an adult, above the threshold). If AE < T, a second check is required (e.g., document reading or human review).
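The rule above is simple enough to express as a routing function. This is a hedged sketch: the function name and return labels are hypothetical, and treating a failure to process (`None`) as a challenge is a policy assumption on our part rather than something NIST prescribes.

```python
from typing import Optional


def challenge_t_decision(estimated_age: Optional[float], threshold: float = 25.0) -> str:
    """Route one subject under a Challenge-T policy (illustrative sketch)."""
    if estimated_age is None:
        # Failure to process: no estimate, so fall back to the second check
        # (an assumed policy choice).
        return "secondary_check"
    if estimated_age >= threshold:
        return "pass"  # AE >= T: no extra check
    return "secondary_check"  # AE < T: document reading or human review
```

With the default T = 25, an estimate of 27.4 passes, while an estimate of 19.0 or a missing estimate is routed to the second check.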

NIST reports:

False positive rate (FPR) under Challenge-T: Minors estimated at or above T who would pass unchallenged. Lower is safer.

False negative rate (FNR) under Challenge-T: Adults estimated below T who would be challenged. Lower is smoother for users.

Ineffectiveness and Inconvenience: Composite rates that weight the above by the age distribution and by how likely people of each age are to attempt the action.
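The two error rates, and the idea of weighting them, can be sketched as follows. Two assumptions are worth flagging: "adult" here means a true age of at least L, following the wording above (NIST's exact cohort definitions may differ), and `weighted_rate` only illustrates population-and-propensity weighting with hypothetical weights. It is not NIST's published formula for Ineffectiveness or Inconvenience.

```python
from typing import Dict, Sequence, Tuple


def challenge_t_rates(
    true_ages: Sequence[float],
    estimates: Sequence[float],
    legal_age: float = 18,
    threshold: float = 25,
) -> Tuple[float, float]:
    """Return (FPR, FNR) under a Challenge-T policy.

    FPR: share of minors (true age < L) estimated at or above T.
    FNR: share of adults (true age >= L, an assumption) estimated below T.
    """
    minors = [e for t, e in zip(true_ages, estimates) if t < legal_age]
    adults = [e for t, e in zip(true_ages, estimates) if t >= legal_age]
    if not minors or not adults:
        raise ValueError("need at least one minor and one adult")
    fpr = sum(e >= threshold for e in minors) / len(minors)
    fnr = sum(e < threshold for e in adults) / len(adults)
    return fpr, fnr


def weighted_rate(rate_by_age: Dict[int, float], weight_by_age: Dict[int, float]) -> float:
    """Combine per-age rates using hypothetical weights
    (e.g., population share x likelihood of attempting the action)."""
    total = sum(weight_by_age[age] for age in rate_by_age)
    return sum(rate_by_age[age] * weight_by_age[age] for age in rate_by_age) / total
```

Weighting matters because a pass rate for 17-year-olds usually carries more operational risk than the same rate for 12-year-olds, simply because older minors attempt age-gated actions more often.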

Variability metrics and pose sensitivity

NIST quantifies frame-to-frame noise on frontal videos and derives a yaw-sensitivity statistic from head-turn videos. The yaw coefficient can be read as “added error for a 90-degree rotation,” with the caveat that the test clips rarely exceed 60 degrees. If your flow allows short clips, average across frames to cut noise. If side-on views are common, use a slightly higher T or guide users back to frontal.
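Both suggestions in the paragraph above can be sketched in a few lines. The helpers are hypothetical, and the linear yaw model is an assumption that follows the "added error for a 90-degree rotation" reading; the `yaw_coeff` you plug in would come from the report, and values beyond roughly 60 degrees are extrapolation. Raising T by the expected added error is one possible heuristic for "a slightly higher T", not a NIST recommendation.

```python
import statistics
from typing import Sequence


def frame_averaged_estimate(frame_estimates: Sequence[float]) -> float:
    """Average per-frame age estimates from a short frontal clip to damp jitter."""
    if not frame_estimates:
        raise ValueError("need at least one frame estimate")
    return statistics.fmean(frame_estimates)


def yaw_adjusted_threshold(base_threshold: float, yaw_deg: float, yaw_coeff: float) -> float:
    """Raise T by the expected added error at this head yaw.

    Assumes error grows linearly with |yaw|, where yaw_coeff is the added
    error (in years) for a 90-degree rotation. Test clips rarely exceed
    60 degrees, so larger angles are extrapolated.
    """
    return base_threshold + yaw_coeff * min(abs(yaw_deg), 90.0) / 90.0
```

For instance, frames estimated at 24, 26, 25, and 27 average to 25.5, and with a hypothetical coefficient of 2 years, a 45-degree head turn would lift T = 25 to 26.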

Capacity and performance context

NIST also publishes implementation details that matter in engineering discussions: model file sizes, library sizes, and single-core CPU timing on 640×480 images. Processing time varies by an order of magnitude across entries in the table, which can inform where you run the estimator and how you batch work.
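As a rough illustration of how single-core timing translates into capacity planning, here is a back-of-the-envelope helper. It assumes throughput scales linearly across cores and that NIST's 640×480 timing is representative of your own images; the 70% headroom default is an arbitrary illustrative choice.

```python
import math


def cores_needed(ms_per_image: float, peak_images_per_second: float, headroom: float = 0.7) -> int:
    """Estimate CPU cores for a target peak load from single-core timing.

    Assumes linear scaling across cores; headroom is the fraction of each
    core you are willing to keep busy at peak.
    """
    if ms_per_image <= 0 or not 0 < headroom <= 1:
        raise ValueError("ms_per_image must be positive and 0 < headroom <= 1")
    per_core_throughput = 1000.0 / ms_per_image  # images per second on one core
    usable = per_core_throughput * headroom      # leave spare capacity
    return math.ceil(peak_images_per_second / usable)
```

An estimator that needs 100 ms per image handles about 10 images per second per core, so a peak of 50 images per second at 70% utilization calls for 8 cores. An entry that is ten times slower would need ten times as many.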

How to use NIST’s age estimation guidance for ID verification

The accuracy of age estimation is becoming increasingly important as more use cases for it arise. Alcohol and tobacco sales, adult-content gates, courier hand-offs, teen safety features, and gaming payouts are all contexts where age estimation and verification play a key role.

Legislation is also advancing, as the new duties that the UK's Online Safety Act places on social media companies and search services show.

So now let’s break down the data in NIST’s report in greater detail to see what to look out for:

In-store and point-of-sale age check

Scenario: A kiosk or tablet captures a selfie before checkout. The system computes AE and compares it to T, which is above the legal limit. If AE ≥ T, proceed. If AE < T, ask for a document scan or a human check.

How to use FATE-AEV’s guidance: Pick T using the Challenge-T table for the dataset that matches your context (for L = 18, look at performance with T = 25) and compare vendors' false positive and false negative rates. Lower values are better.
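One way to turn a Challenge-T table into a decision is to cap the rate of minors who pass unchallenged and then minimize adult challenges among the thresholds that meet the cap. The sketch below does exactly that. The `ChallengeRow` type and the numbers in the usage note are hypothetical placeholders, not NIST results.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ChallengeRow:
    threshold: int  # T
    fpr: float      # share of minors passing unchallenged
    fnr: float      # share of adults challenged


def pick_threshold(rows: Sequence[ChallengeRow], max_fpr: float) -> Optional[ChallengeRow]:
    """Among thresholds whose FPR meets the cap, return the one with the lowest FNR."""
    acceptable = [r for r in rows if r.fpr <= max_fpr]
    if not acceptable:
        return None  # no threshold meets the safety target for this vendor
    return min(acceptable, key=lambda r: r.fnr)
```

Given placeholder rows for T = 22, 25, and 28 with FPRs of 0.08, 0.02, and 0.005, a cap of 0.03 selects T = 25. A cap that no row satisfies returns `None`, which signals that the vendor cannot meet your target on that dataset.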

Operational tip: A pre-capture prompt that asks users to face the camera and remove their glasses can cut error with minimal friction.

Alcohol delivery and courier-verified hand-offs

Scenario: At order time, AE runs as a pre-screen. If AE is below T, the user is told that proof will be needed at the door and the workflow pivots to document capture or NFC. Couriers also get a clear signal about whether to expect a check.

How to use FATE-AEV’s guidance: With L = 18 and T = 25, make use of the Ineffectiveness table; this would be your population-weighted rate of minors who would get access to restricted goods without a challenge. Lower values are better.

Online platforms that offer under-18 safety modes

Scenario: During sign-up, AE routes clear minors to supervised or restricted modes. Ambiguous cases go to a secondary check. Some services repeat the check at key moments, like turning on monetization or joining adult communities.
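Unlike the two-way Challenge-T rule, this flow has three outcomes. A minimal sketch, where the band edges (15 and 22) and the mode names are hypothetical values chosen only to illustrate the structure:

```python
from typing import Optional


def route_signup(
    estimated_age: Optional[float],
    minor_ceiling: float = 15.0,  # hypothetical: below this, clearly a minor
    adult_floor: float = 22.0,    # hypothetical: at or above this, clearly an adult
) -> str:
    """Three-band sign-up routing: restricted, secondary check, or standard."""
    if minor_ceiling >= adult_floor:
        raise ValueError("minor_ceiling must be below adult_floor")
    if estimated_age is None:
        return "secondary_check"  # no estimate: treat as ambiguous
    if estimated_age < minor_ceiling:
        return "restricted_mode"
    if estimated_age >= adult_floor:
        return "standard_mode"
    return "secondary_check"  # ambiguous middle band
```

The width of the middle band is the main tuning lever: widening it sends more users to the secondary check but reduces misrouted minors and adults.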

How to use FATE-AEV’s guidance: Image quality matters in this scenario, because a cheap webcam can produce images that skew results. Pay close attention to how solutions performed across image-quality tiers: border-crossing webcam captures are lower quality, while mugshots and application photos are higher quality. Lower values are better.

Operational tip: Monitor outcomes by sex and region, because the report shows that rankings and errors shift across those slices. Apply the same review habit you would use for face verification, where the identity guidelines set explicit limits on demographic differences in performance.
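A sketch of that monitoring habit: compute MAE per slice from your own labeled outcomes and track the gap between the best and worst slice. The record layout `(group, true_age, estimated_age)` is an assumption, and any alert threshold for the gap would be your own policy.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def mae_by_group(records: Iterable[Tuple[str, float, float]]) -> Dict[str, float]:
    """records: (group label such as sex or region, true age, estimated age)."""
    errors: Dict[str, List[float]] = defaultdict(list)
    for group, true_age, estimated_age in records:
        errors[group].append(abs(estimated_age - true_age))
    return {group: statistics.fmean(errs) for group, errs in errors.items()}


def largest_gap(per_group: Dict[str, float]) -> float:
    """Difference between the worst and best group MAE."""
    return max(per_group.values()) - min(per_group.values())
```

For example, records `("F", 20, 22)`, `("F", 30, 30)`, `("M", 25, 21)`, and `("M", 40, 42)` give an MAE of 1.0 for F, 3.0 for M, and a gap of 2.0 years.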

The main technical challenges of age estimation

We can highlight four key hurdles for age estimation software and ID verification vendors:

The aging process differs across ethnicities or life stages

Aging cues differ by sex and by regional cohorts. Skin texture, facial hair, hairstyle habits, and bone structure change what the model can “read.” That’s why it’s important to have a solution suitable for all demographics, especially if your customer base is global.

Age mix matters as well: estimates for infants in the visa set are often very close, while error rises through adulthood and later life. This is why a single overall average can be misleading if your users' ages and demographic groups are distributed differently from those in the test data.
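One way to see this effect is to re-weight per-band error by your own user mix. The sketch assumes that per-age-band MAE measured on a benchmark transfers to your users, which is itself a simplification. The band labels and numbers in the usage note are hypothetical.

```python
from typing import Dict


def reweighted_mae(mae_by_band: Dict[str, float], user_share_by_band: Dict[str, float]) -> float:
    """Expected MAE for your traffic, given per-band MAE and your users' age mix."""
    total = sum(user_share_by_band.values())
    if total <= 0:
        raise ValueError("user shares must sum to a positive value")
    return sum(mae_by_band[band] * share for band, share in user_share_by_band.items()) / total
```

With hypothetical band MAEs of 2, 3, and 5 years for ages 13–17, 18–30, and 31–60, a service whose users are evenly split between the two younger bands sees an expected MAE of 2.5 years, while one dominated by older users (10%, 30%, 60%) sees about 4.1 years, even though the estimator is the same.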

Capture differences swamp model skill if you ignore them

Mugshots and application photos can look studio-like; however, border imaging is often webcam-like with mixed lighting and off-axis faces. NIST highlights that error rises on border images for many algorithms, even for the same subjects, so optics and pose can seriously affect difficulty.

Moment-to-moment wobble and head yaw

NIST quantifies frame noise on frontal videos and sensitivity to head turns, then publishes simple statistics that reflect "how jittery" the estimates are and how much error grows as yaw increases. Those figures matter for user experience. If your flow captures a short clip rather than a still, you can average across frames to reduce jitter; however, if your traffic often includes angled poses, you should expect higher error.

Glasses and small presentation details

Glasses shift the estimates for many systems. NIST’s paired analysis compares the same person with and without glasses on border imaging and reports measurable deltas. A short camera prompt that asks users to remove glasses can be worth the extra second in order to minimize false challenges.
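A paired analysis of this kind boils down to comparing two estimates for the same person. The sketch below reports both the average signed shift (does wearing glasses push estimates older or younger?) and the average absolute shift. It is an illustration of the idea with a hypothetical function name, not NIST's exact procedure.

```python
import statistics
from typing import Sequence, Tuple


def glasses_effect(pairs: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """pairs: (estimate with glasses, estimate without glasses) for the same person.

    Returns (mean signed shift, mean absolute shift) in years.
    """
    if not pairs:
        raise ValueError("need at least one pair")
    shifts = [with_glasses - without_glasses for with_glasses, without_glasses in pairs]
    return statistics.fmean(shifts), statistics.fmean(abs(s) for s in shifts)
```

For pairs (30, 28), (25, 24), and (40, 41), glasses add about 0.67 years on average, and estimates move by about 1.33 years in either direction. A positive signed shift would mean glasses make people look older to the model, which matters most for minors near T.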

Regula is one of NIST’s top performers

NIST’s FATE-AEV provides decision-makers with reputable and repeatable measurements on common AE/AV data, and Regula sits at the top of a few performance charts. Namely, Regula ranked among the top three in two of the most critical age assurance scenarios: Challenge 25 and Child Online Safety (ages 13–16).

This means that Regula’s technology excels both at estimating real age and at protecting minors from restricted items and areas.

Regula Face SDK is a cross-platform biometric verification solution that can support your verification process with:

Advanced facial recognition with liveness detection: The SDK uses precise facial recognition algorithms with active and passive liveness detection to verify users in real time, preventing spoofing attacks that use photos or videos.

Age estimation: The SDK estimates the user’s age with high accuracy across all racial demographics, protecting minors and businesses alike.

Face attribute evaluation: The SDK assesses key facial attributes like expression and accessories to improve accuracy and security during identity verification.

1:1 face matching: The SDK matches the user’s live facial image to the main portrait in the identity document (primary or secondary, e.g., in the RFID chip), verifying identity at a 1:1 level.

1:N face recognition: The SDK scans and searches the user’s facial data against a whole database, such as a blacklist.

Adaptability to various lighting conditions: The SDK operates effectively in almost any ambient light.

Have any questions? Don’t hesitate to contact us, and we will tell you more about what Regula Face SDK has to offer.