Over the past six months, we've had several conversations with prospects about whether ChatGPT can be used for identity verification.

The short answer is, "No, you can’t."

For a more detailed explanation, read on.

Addressing the source

The first natural step was to ask ChatGPT itself.

The answer is no surprise. Why? There are at least three reasons for that.


ChatGPT is not intended for identity verification

OpenAI itself explicitly states its tool must not be used for identity verification. Their usage policies, among other things, state the following:

1. Don’t compromise the privacy of others, including:

Collecting, processing, disclosing, inferring or generating personal data without complying with applicable legal requirements

Using biometric systems for identification or assessment, including facial recognition

(...)

2. Don’t perform or facilitate the following activities that may significantly impair the safety, wellbeing, or rights of others, including:

(...)

b. Making high-stakes automated decisions in domains that affect an individual’s safety, rights or well-being (e.g., law enforcement, migration, management of critical infrastructure, safety components of products, essential services, credit, employment, housing, education, social scoring, or insurance)

(...)

They also have the following service-specific policies in addition to the Universal Policies:

1. Don’t compromise the privacy of others, including:

(...)

Soliciting or collecting the following sensitive identifiers, security information, or their equivalents: payment card information (e.g. credit card numbers or bank account information), government identifiers (e.g. SSNs), API keys, or passwords

(...)

Violations can result in actions against your content or account, ranging from warnings to restrictions. This is just the first reason ChatGPT should not be used for identity verification.

ChatGPT lacks capabilities for identity verification

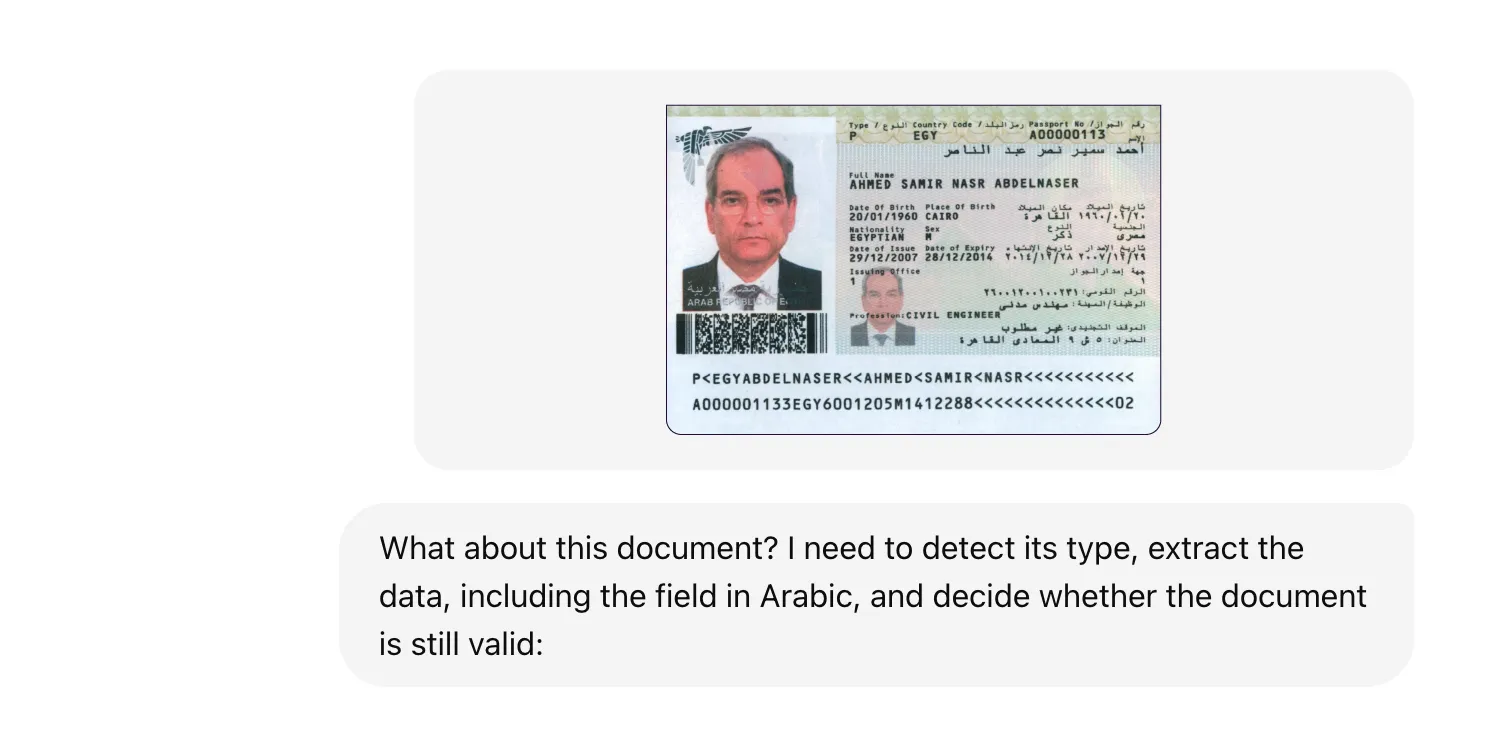

Let’s run a short practical experiment with GPT-4o, currently the newest and most advanced model. We’ll use a passport specimen from our recent post about Egyptian documents. Here it is:

Here’s what we got:

As you can see, ChatGPT is pretty good at OCR. We also asked it to extract the encoded information:

Not bad. So, de facto, ChatGPT is capable of extracting personal information, including from the MRZ, and does so fairly accurately. It also managed to identify the barcode type, even though it couldn’t read its contents.

However, when it comes to actual verification and authentication, ChatGPT has no technical capability to examine security features or run authenticity checks.
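To make the gap between reading data and checking it more tangible, here is a minimal Python sketch of one of the simplest data-level checks: validating an MRZ check digit with the weighting scheme defined in ICAO Doc 9303 (repeating weights 7, 3, 1; digits keep their value, letters A to Z map to 10 to 35, and the filler `<` counts as 0). The function names are our own illustration and not part of any product or library, and the sample values come from the widely used ICAO Doc 9303 specimen example. Passing this check only shows that an MRZ field is internally consistent; it says nothing about whether the physical document is genuine.

```python
DIGITS = "0123456789"
WEIGHTS = (7, 3, 1)  # repeating weights defined in ICAO Doc 9303


def mrz_char_value(ch: str) -> int:
    """Map a single MRZ character to its numeric value."""
    if ch in DIGITS:
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10  # A=10 ... Z=35
    if ch == "<":
        return 0  # filler character
    raise ValueError(f"Character not allowed in an MRZ: {ch!r}")


def mrz_check_digit(field: str) -> int:
    """Compute the check digit for one MRZ field."""
    total = sum(
        mrz_char_value(ch) * WEIGHTS[i % 3] for i, ch in enumerate(field)
    )
    return total % 10


def mrz_field_is_consistent(field: str, check_char: str) -> bool:
    """Return True if the printed check digit matches the computed one."""
    if len(check_char) != 1 or check_char not in DIGITS:
        return False
    return int(check_char) == mrz_check_digit(field)


if __name__ == "__main__":
    # Document number, date of birth, and expiry date from the ICAO specimen.
    print(mrz_field_is_consistent("L898902C3", "6"))  # True
    print(mrz_field_is_consistent("740812", "2"))     # True
    print(mrz_field_is_consistent("120415", "9"))     # True
    # A single altered character breaks the check.
    print(mrz_field_is_consistent("L898902C4", "6"))  # False
```

A check like this catches typos and crude edits to the MRZ, but a specimen or a well-made forgery will pass it, which is why dedicated tools layer many further checks on top.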

The tool did not detect that the submitted passport was actually a specimen.

Tools purpose-built for remote identity verification, however, can detect subtle signs of forgery.

| Feature | ChatGPT | Specialized IDV tools |
|---|---|---|
| Document type detection | Can detect a general type (e.g., a passport of Egypt). | Can identify the exact document type and series (e.g., a passport of Egypt, 2008 series). |
| Text data detection | Yes | Yes |
| Security feature detection | Can detect only text/numeric data like the one in a visual zone or MRZ. | |
| Template reading and validation | Relies on web-found or user-uploaded files. | Uses algorithms to recognize each document's structure and data based on extensive preparation and forensic examination. If anything doesn’t match or the document is a specimen, it will be instantly identified. |
| Cross-checking | No | Identifies inconsistencies, alterations, or tampering that might not be visible to the naked eye. |
| Integration with external databases | No | Integrates with official databases to cross-reference document information and ensure its validity. |
| Facial detection and recognition | No | Compares multiple photos in the document between each other and with a live selfie or video to confirm the identity of the holder. |
| Electronic document processing | No | Can read and verify an electronic chip in a document. |

An important note: we are not implying that ChatGPT cannot be taught to do all these things. On the contrary, it potentially could. However, that would put us back on the thin ice of handling personal data, along with growing concerns about privacy and the responsible use of AI.

The use of artificial intelligence in the EU will be regulated by the AI Act, the world's first comprehensive AI law. It takes effect on August 1, 2024, with different parts being implemented over the next 6 to 36 months.

In particular, it prohibits the use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement purposes, except in a limited list of situations.

Uploading users’ IDs to ChatGPT might violate privacy laws

Last but not least, handing over users’ identity documents to a third-party service like ChatGPT could simply be illegal. Here’s just one potential situation:

Under European and American laws, users have the right to request the deletion of all their personal data. Indeed, section 6 of the OpenAI Privacy Policy confirms that you can delete your personal data from their records. However, this can become a problem if you process millions of other people’s personal data in this way.

When you send data to such a service, it goes to its developer and is stored on their servers. Major services openly state that the content they generate can be used in datasets to train future models. While this doesn’t directly include the information you upload, it does get used in responses, meaning it remains partially accessible.

If you use a paid version of ChatGPT, you can opt out of having your content included in these training datasets. However, it's important to remember that even if you opt out, your data still stays on the company’s servers and can potentially be manually reviewed.

Why is the responsible use of ChatGPT and personal data extremely important?

At this point, it’s critical to understand ChatGPT's complexity.

ChatGPT isn't just a single model but an extensive system that integrates numerous modules and models to perform specific actions. It isn't simply trained once; it evolves by leveraging various subsystems.

The process is akin to a growing tree, branching out to involve many subsystems, each contributing to solving diverse tasks: writing text and code, generating images, or processing information. This interconnectedness is vast, almost like an entire city working together.

Consider this analogy: imagine living in a city with cameras everywhere recording your every move. You go about your daily activities unaware of how this data is processed, analyzed, and stored. Eventually, someone could query a ChatGPT-like system about your whereabouts, and it could deduce where you work based on patterns in the data. This demonstrates the significant power of aggregated data and its potential for misuse.

Furthermore, people upload sensitive information like medical records, which means ChatGPT could know detailed health information. Insurance companies might exploit this, increasing premiums based on health data, leading to unintended and potentially harmful consequences.

The main concerns are personal data leaks and the unpredictable ways in which your data could be later used. Much like a butterfly effect causing a tsunami, small actions in data handling can lead to significant, far-reaching impacts.

Don’t identity verification providers use AI? Isn’t it the same thing? Is it safe?

It’s a fair question. As we've noted in previous posts, modern identity verification heavily relies on neural networks. However, it's important to distinguish between different types of AI.

Let’s use a liveness check as an example of a technique that leverages AI. Identity verification providers train AI models specifically for tasks like these. Think of these models as specialized tools, like a hammer designed for a specific job. They perform narrowly defined tasks efficiently and securely. The data processed during a liveness check is only used for liveness checks and nothing more.

In contrast, ChatGPT is more like a sophisticated multitool. It's designed to understand and generate text, but it can also integrate various models to accomplish a wide range of tasks, from coding to drawing images. This universality means it can handle more complex interactions but also poses greater risks when it comes to handling personal data.

When using a system like ChatGPT, personal data inevitably flows into other interconnected tools within the ecosystem. This could lead to misuse or unauthorized access, making it less secure than the specialized AI used in identity verification.

Thus, while AI in identity verification is generally safe due to its specific and controlled application, using a generalized AI like ChatGPT for such tasks introduces significant risks. It's crucial to ensure that personal data is handled by systems specifically designed and regulated for that purpose to maintain security and compliance.
