Last year saw the EU Artificial Intelligence Act (AI Act) officially enter into force—and as of mid-2025, it has outlawed the worst cases of AI-based identity manipulation and mandated transparency for AI-generated content. This came at a critical time, as 2024 deepfake statistics showed that half of all businesses have experienced fraud involving AI-altered audio and video.

However, the EU AI Act doesn’t stand alone in its fight against AI identity fraud, as new anti-deepfake laws are being passed all over the world. In this article, we will explore these laws: from the US to China, we will look at the most prominent AI/deepfake regulations in 2025. And, at the end, Regula's CTO, Ihar Kliashchou, will share his view on what should go hand in hand with legislation to effectively fight against AI identity fraud.

Denmark’s deepfake law

One of the most talked-about laws against AI deepfakes comes from Denmark: the government has amended its copyright law to ensure that every person “has the right to their own body, facial features and voice.” In effect, Denmark is treating a person’s unique likeness as intellectual property—a first-of-its-kind approach, at least in Europe. With broad cross-party support, the proposal was introduced for public consultation in mid-2025 and is expected to be passed by late 2025.

Under this amendment, any AI-generated realistic imitation of a person (face, voice, or body) shared without consent would violate the law. Danish citizens would have a clear legal right to demand takedown of such content, and platforms that fail to remove it would “face severe fines,” according to Denmark’s culture minister Jakob Engel-Schmidt.

More than a lifelong warranty

Notably, performers and ordinary individuals alike would enjoy protection against unauthorized AI reproductions of their work, extending 50 years after their death. This way, a Danish citizen who finds their face misused in a deepfake scam, or their family after their passing, can force its removal and then pursue the platform for compensation. The law does, however, make exceptions for parody and satire, which remain permitted.

The Danish government has also signaled it will use its EU Council presidency in late 2025 to push for similar measures across Europe. In other words, Denmark’s national experiment could become a blueprint for deepfake regulations elsewhere in the years to come.

The United States’ TAKE IT DOWN Act

Until recently, the United States addressed deepfakes primarily through state laws and civil lawsuits. However, in May 2025, the TAKE IT DOWN Act was signed into law, marking the first U.S. federal law directly restricting harmful deepfakes.

A name with a double meaning

Interestingly, “TAKE IT DOWN” is an acronym standing for “Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks Act.”

The TAKE IT DOWN Act focuses on non-consensual intimate imagery and impersonations: deepfake pornography, sexual images, or any AI-generated media falsely depicting a real person in a harmful way. The legislation also makes it a crime to knowingly share nude or sexual images of someone without consent, including AI-generated fakes. Penalties include monetary fines and custodial sentences of up to three years; the maximum applies in aggravated circumstances such as prior offences or distribution with intent to harass.

Moreover, the law doesn’t only punish the initial perpetrators; it also imposes obligations on platforms to act when such content is flagged. According to the new law, if someone finds an explicit deepfake of themselves, online platforms are now required by federal law to remove it within 48 hours of a report. By May 2026, any platform that hosts user content and could therefore contain intimate images must have a clear notice-and-takedown system in place.
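For platform teams, the 48-hour window translates into a simple deadline check. The snippet below is a minimal sketch, assuming the clock starts at the moment a report is received and that all timestamps are timezone-aware; the function names `removal_deadline` and `is_overdue` are hypothetical and are not taken from the Act or from any product.

```python
from datetime import datetime, timedelta, timezone

# Removal window described in the article (48 hours from the report).
TAKEDOWN_WINDOW = timedelta(hours=48)


def removal_deadline(reported_at: datetime) -> datetime:
    """Return the latest UTC time by which flagged content should be removed."""
    if reported_at.tzinfo is None:
        raise ValueError("reported_at must be timezone-aware")
    return reported_at.astimezone(timezone.utc) + TAKEDOWN_WINDOW


def is_overdue(reported_at: datetime, now: datetime) -> bool:
    """True if the content is still up after the removal deadline has passed."""
    if now.tzinfo is None:
        raise ValueError("now must be timezone-aware")
    return now.astimezone(timezone.utc) > removal_deadline(reported_at)
```

Normalizing to UTC before comparing avoids off-by-hours errors when the report and the check happen in different time zones.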

As of August 2025, several other bills are pending as well, intended to complement the TAKE IT DOWN Act:

Disrupt Explicit Forged Images and Nonconsensual Edits (DEFIANCE) Act, re-introduced in May 2025 after an earlier version passed the Senate in July 2024 but expired at the close of the 118th Congress. It would give victims of non-consensual sexual deepfakes a federal civil cause of action with statutory damages of up to $250,000.

Protect Elections from Deceptive AI Act, introduced 31 March 2025. It would prohibit the knowing distribution of materially deceptive AI-generated audio or visual material about candidates in federal elections.

NO FAKES Act, introduced 9 April 2025. It would make it unlawful to create or distribute an AI-generated replica of a person’s voice or likeness without consent, with limited exceptions for satire, commentary and reporting.

China’s AI content labeling regulations

In March 2025, Chinese authorities introduced the Measures for Labeling of AI-Generated Synthetic Content, a set of rules that will come into effect on 1 September 2025. The legislation builds upon the regulations of 2022-2023, and establishes a traceability system for all AI-generated media.

Under the 2025 measures, there are now mandatory technical requirements: any AI-generated or AI-altered content, of any type (image, video, audio, text, virtual reality), must be labeled as such. More specifically, there are two ways of labeling:

Visible: e.g., a watermark on an image or a caption in a video indicating the content is synthetic.

Invisible: e.g., an embedded digital signature or mark in the file’s metadata that can be detected by algorithms even if the visible label is removed.

This way, if a Chinese app allows a user to face-swap themselves with a celebrity in a video, that resulting video must have both a visible notice and an encrypted watermark in its code. Likewise, content websites now have a responsibility to look for these watermarks: if a piece of content has none, the platform is required to get the user to declare that it is AI-generated. China’s law also bans the alteration of AI watermarks, effectively outlawing any tools for removing those identifiers.

If all else fails, and a piece of content that is suspected to be AI-made is left unmarked, it will be labeled as “suspected synthetic” for viewers.
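The platform-side flow described above (look for an embedded mark, fall back to a user declaration, and finally flag unmarked content that looks synthetic) can be sketched as a short decision function. This is a simplified illustration of the article's summary, not a rendering of the legal text; the `Upload` fields and `Verdict` names are hypothetical, and how a platform detects an embedded label or suspects synthetic content is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    LABELED = "synthetic: embedded label found"
    USER_DECLARED = "synthetic: declared by uploader"
    SUSPECTED = "suspected synthetic"
    UNLABELED = "no label applied"


@dataclass
class Upload:
    has_embedded_label: bool   # hypothetical: invisible mark found in metadata
    declared_by_user: bool     # hypothetical: uploader declared the content AI-generated
    flagged_by_detector: bool  # hypothetical: platform's own detector raised a flag


def classify(upload: Upload) -> Verdict:
    """Apply the checks in the order the article describes them."""
    if upload.has_embedded_label:
        return Verdict.LABELED
    if upload.declared_by_user:
        return Verdict.USER_DECLARED
    if upload.flagged_by_detector:
        return Verdict.SUSPECTED
    return Verdict.UNLABELED
```

For instance, `classify(Upload(False, False, True))` yields `Verdict.SUSPECTED`, matching the fallback case where unmarked content is shown to viewers as "suspected synthetic."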

France’s AI content labeling regulations (in progress)

Similar to the previous example, in late 2024 the French National Assembly introduced Bill No. 675 to mandate clear labeling of AI-generated or AI-altered images posted on social networks. By early 2025, this proposal had gained momentum: users who fail to label their AI-altered photos or videos would face fines up to €3,750, and platforms that neglect their detection or flagging duties could incur fines up to €50,000 per offense. However, it has not been adopted yet, as government talks continue.

What has been adopted (albeit in 2024) is the new Article 226-8-1, which amended the Penal Code to criminalize non-consensual sexual deepfakes. It punishes making public, by any means, sexual content generated by algorithms reproducing a person’s image or voice without consent. Possible penalties include up to 2 years’ imprisonment and a €60,000 fine, with higher thresholds in some specific contexts.

Developments in the United Kingdom’s Online Safety Act (in progress)

In the UK, 2025 has been the year of implementing the Online Safety Act 2023, a law that targets harmful online content. While the Act itself was passed in late 2023, many of its key provisions kicked in during 2024 and 2025—and some refinements are being made as well.

More specifically, the UK has tightened laws regulating deepfakes and intimate image abuse. Earlier, the Online Safety Act 2023 had already made it illegal to share or threaten to share intimate deepfake images without consent, but it did not cover the creation of such content. The proposed 2025 amendments, however, target creators directly: intentionally creating sexually explicit deepfake images without consent, either with intent to cause alarm, distress, or humiliation or for the purpose of sexual gratification, would be punishable by up to two years in prison.

New age verification rules for adult sites

Since 25 July 2025, dedicated adult sites have been required to carry out “highly effective age assurance” to block under-18 users from accessing their content. In just the first five days after the law took effect, there were an extra 5 million checks per day, with document scans and credit card checks among the methods used.

It’s a significant cultural shift from simply clicking “Yes, I am 18+” on a website, and it has had side effects as well: the new rules drove many users toward VPNs, with downloads spiking over 1,800%.

An insight from Regula's CTO: laws not enough to beat AI fraud

AI is driving a new era of fraud—one that doesn't just fool machines, but people. It is now persuasive enough to trick people into handing over passwords, approving transfers, or sharing credentials. And because many of these scams cross borders, regional laws rarely work, and penalties rarely catch the perpetrators. People lose their savings, businesses face losses, and institutions struggle to keep trust intact.

Our defenses haven’t always kept pace, either. Regulations that outlaw malicious use of deepfakes may look good on paper, but without the tools to detect and prove synthetic content, they’re toothless. How would speed limits help if there were no radar guns or police to enforce them? The same logic applies here.

The most effective response will require more than legislation. It demands a universal approach: stronger verification technologies to catch fake identities at critical checkpoints, paired with public education to help people recognize the red flags. Because when deepfakes look real, sound real, and pass basic checks, the only thing standing between a person and fraud is their ability to recognize the red flags—and act accordingly.

Technology can detect what humans can’t, but humans still need to be equipped to detect what technology misses. One without the other simply won’t hold.

Fighting deepfakes with Regula’s solutions

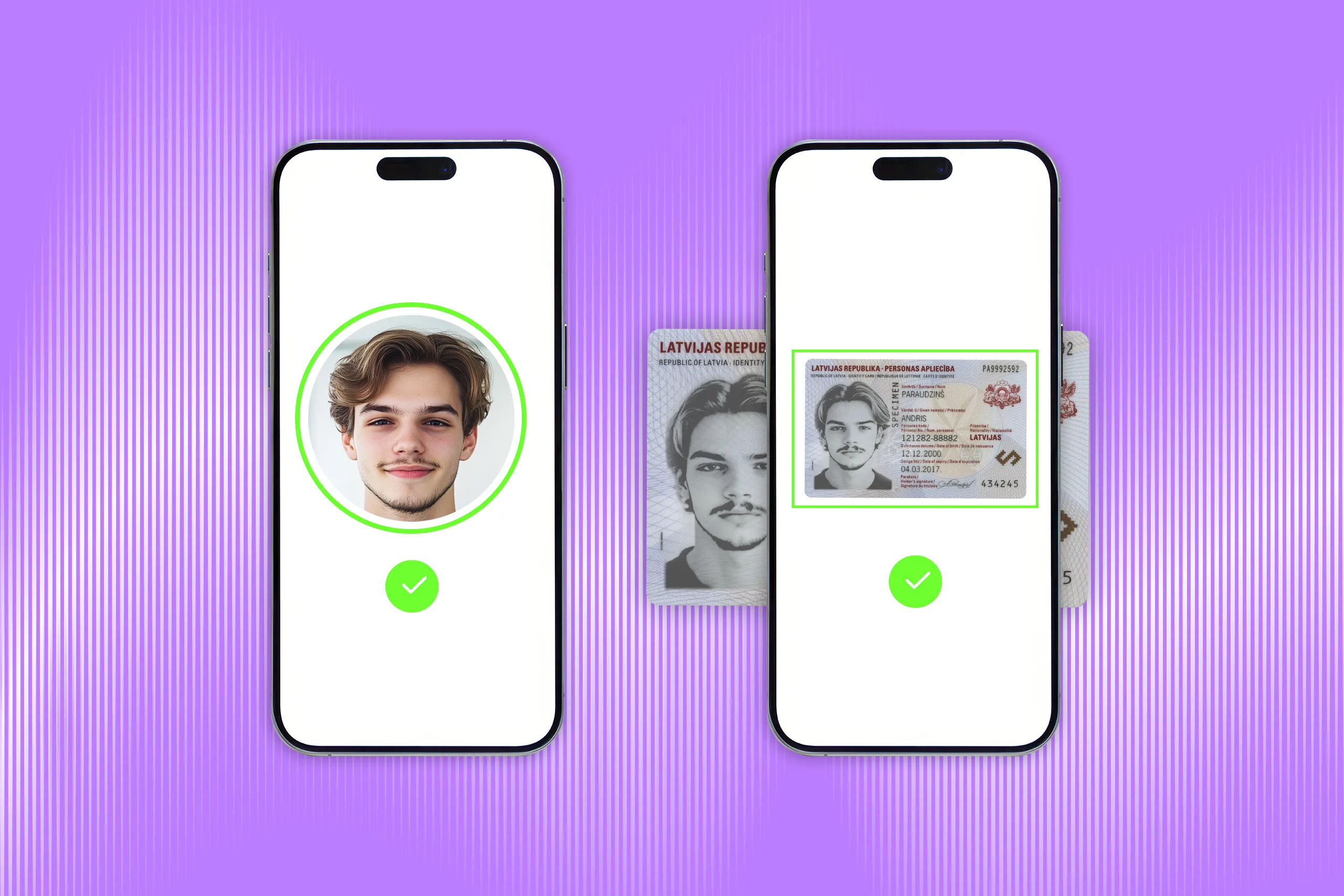

The influx of new deepfake laws in 2025 is a signal that governments expect stronger measures against identity fraud. From a technological standpoint, we are seeing a major push toward advanced solutions with liveness detection, which can still detect most deepfakes.

One example is Regula Face SDK, a cross-platform biometric verification solution that can perform:

Advanced facial recognition with liveness detection: The SDK uses precise facial recognition algorithms with active liveness detection to verify users in real time, preventing spoofing through photos or videos.

Face attribute evaluation: The SDK assesses key facial attributes like age, expression, and accessories to improve accuracy and security during identity verification.

Signal source control: The SDK prevents the signal source from being tampered with, making deepfake injection attacks less potent.

Adaptability to various lighting conditions: The SDK operates effectively in almost any ambient light.

1:1 face matching: The SDK matches the user’s live facial image to their ID document or database entry, verifying identity at a 1:1 level.

1:N face recognition: The SDK scans and compares the user’s facial data against a database, identifying them from multiple entries at once (1:N).
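To make the difference between 1:1 matching and 1:N recognition concrete, here is a minimal sketch, assuming faces have already been converted into numeric embedding vectors by some face model. It does not use or represent the Regula Face SDK API; the functions `verify` and `identify`, the cosine-similarity measure, and the 0.8 threshold are all illustrative assumptions.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify(live: list[float], reference: list[float], threshold: float = 0.8) -> bool:
    """1:1 matching: does the live face match one specific reference?"""
    return cosine_similarity(live, reference) >= threshold


def identify(
    live: list[float], gallery: dict[str, list[float]], threshold: float = 0.8
) -> Optional[str]:
    """1:N recognition: return the best-matching identity, or None if none passes."""
    best_id, best_score = None, threshold
    for person_id, embedding in gallery.items():
        score = cosine_similarity(live, embedding)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id
```

In practice, the threshold is tuned against the false-accept and false-reject rates a deployment can tolerate, and a liveness check would run before either comparison so that a replayed or injected image never reaches the matcher.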

Have any questions? Don’t hesitate to contact us, and we will tell you more about what Regula Face SDK has to offer.