Biometric authentication is becoming more widespread every year, and it’s not hard to see why. Security measures like liveness detection are considered both reliable and non-intrusive for users, provided the underlying solution is robust enough.

At the same time, a less sophisticated system can be vulnerable to presentation attacks: attempts to gain unauthorized access using stolen face images or replicas. What’s more, presentation attacks are not hard to execute, as the simplest forms require nothing more than a printed photo or a tablet screen showing the user’s face. And nowadays, many people’s faces are constantly exposed on social media, in surveillance footage, and in professional records.

In this article, we’ll take a look at several known types of presentation attacks and explore what makes them a threat.


What are presentation attacks?

A presentation attack is an attempt to mislead a biometric authentication system with the help of tools that mimic the appearance of a legitimate user.

The ISO/IEC 30107-3 standard on biometric presentation attack detection identifies at least nine vulnerable points in the identity verification process where attacks could occur. Mapping them onto the key verification stages gives the following map of potential attack points:

[Figure: map of potential attack points across the identity verification stages]

Presentation attacks occur at the very first point of interaction with the system, when biometric data is captured by the sensor. This is the easiest attack type to perform and, therefore, the most common. Luckily, these attacks can be prevented with the help of an advanced presentation attack detection (PAD) system that supports liveness detection functionality (more on that later).

Now, let’s dive into the common types of presentation attacks and see how they may look.

Presentation attack types

Fraudsters can be very inventive in their methods of bypassing even the most sophisticated security, which explains the length of our list. We’ve aimed to go beyond the basic classification and categorize a wide range of presentation attacks.

Basic printed photo attack

This is the most rudimentary presentation attack, yet it still works against outdated or poorly configured biometric systems. The attacker presents a printed face image of the legitimate user to the authentication camera, hoping to trigger a match. If the system lacks liveness detection, a high-quality print may be enough to pass.

Cut-out photo mask attack

A slight evolution of the basic print attack, this method involves cutting holes for the eyes and mouth in the printed face image. The attacker can then blink or move their lips behind the print, giving the illusion of a live subject.

Layered print attack

Instead of using a single printed face image, attackers print multiple copies, then cut and stack sections to create an artificial depth effect. Slightly raising the nose or cheeks this way can fool systems that rely on depth perception to detect attacks.
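To see why stacking layers can help an attacker, consider a toy depth check. The minimal sketch below assumes a depth camera that returns a per-pixel distance map (in millimeters) already cropped to the face; the function name `depth_cues` and all thresholds are hypothetical and chosen only for illustration. A flat print shows almost no relief, and a layered print shows only a few discrete depth levels, unlike the continuous curvature of a real face.

```python
import numpy as np

def depth_cues(face_depth_mm: np.ndarray,
               min_relief_mm: float = 15.0,
               min_levels: int = 8,
               level_step_mm: float = 2.0) -> dict:
    """Toy depth cues for a face-region depth map (distances in mm).

    All thresholds are hypothetical and for illustration only.
    """
    valid = face_depth_mm[np.isfinite(face_depth_mm)]
    # Relief: spread between the nearest and farthest parts of the face,
    # using percentiles so a few noisy pixels do not dominate.
    relief = float(np.percentile(valid, 95) - np.percentile(valid, 5))
    # Quantize depth into coarse steps; a stack of flat layers occupies
    # only a handful of steps, while a real face varies continuously.
    levels = int(np.unique(np.round(valid / level_step_mm)).size)
    return {
        "relief_mm": relief,
        "levels": levels,
        "looks_flat": relief < min_relief_mm,
        "looks_layered": levels < min_levels,
    }

# Synthetic examples: a flat print and a smooth dome standing in for a face.
y, x = np.mgrid[-1:1:64j, -1:1:64j]
dome = 400.0 - 30.0 * np.clip(1.0 - (x**2 + y**2), 0.0, None)
print(depth_cues(np.full((64, 64), 400.0)))  # flat print
print(depth_cues(dome))                       # face-like relief
```

A check that looked only at total relief could be passed by a print with enough stacked layers, which is exactly the weakness this attack targets; production depth-based PAD relies on far richer models than these two numbers.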

3D film print attack

This method uses face images printed on flexible 3D film materials, often translucent or semi-rigid, to create a contoured surface that mimics the curvature of a real human face. These films can even be wrapped around a support structure to introduce facial depth and partial texture cues. The reflectivity and surface scattering of 3D films can closely simulate skin under certain lighting conditions, especially under office or indoor light sources.

Partial face mask attack

Partial face mask attacks use rigid or flexible material shaped to only cover key areas of the face—typically the eyes, nose bridge, or lower jaw—depending on what parts the biometric sample requires for recognition. These masks may be combined with makeup, adhesive prosthetics, or occlusion (e.g., a hand or glasses) to create just enough similarity with a target individual.

Nylon stocking mask attack

These attacks rely on wearing a thin nylon mask—typically the kind used in disguise or theft—to partially blur, compress, or visually smooth out facial features. While primitive, nylon masks can distort key facial landmarks in a way that either anonymizes the attacker or causes biometric systems to falsely accept them as another person in the database.

Silicone and latex mask attack

Silicone and latex masks are known to provide realistic texture, flexibility, and even minor movement when worn. That’s why attackers trying to fool facial recognition technology increasingly produce custom-made masks that closely match the target’s physical characteristics.

3D-printed model attack

Attackers can now also fabricate realistic facial models with consumer-grade 3D printers—the model is either worn like a mask or presented on a stand to the camera. These prints are often painted to simulate skin tones and may include false eyelashes, hair, or contact lenses to simulate eye texture. What makes this method especially dangerous is that 3D-printed models can be made hollow or mounted on rigs, allowing controlled head movements during authentication.

Mannequin head attack

For this method, attackers add wigs, makeup, and printed facial textures onto mannequins to create an eerily accurate representation of their target. Some mannequin heads even include mechanical components that allow slight facial movement.

Photo replay on-screen attack

Rather than printing an image, some attackers simply display a high-quality face image on a screen (e.g., a tablet or phone) and present it to the camera. If the system only analyzes static features, this attack can be successful.
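A system that judges a single still frame has no way to notice that nothing in the scene ever changes. As a minimal sketch, assuming grayscale frames of equal size from the same camera, the mean absolute difference between consecutive frames gives a crude measure of temporal activity; the function names and the threshold are hypothetical.

```python
import numpy as np

def temporal_activity(frames) -> float:
    """Mean absolute intensity change between consecutive grayscale frames."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    # Convert to float first so subtracting uint8 frames cannot wrap around.
    stack = np.stack([np.asarray(f, dtype=np.float32) for f in frames])
    return float(np.abs(np.diff(stack, axis=0)).mean())

def looks_static(frames, threshold: float = 1.0) -> bool:
    # Hypothetical threshold in 8-bit intensity units; in practice it would
    # have to be tuned against real sensor noise and screen flicker.
    return temporal_activity(frames) < threshold
```

This crude signal is easy to defeat: the video replay attack described next supplies natural motion, so the sketch illustrates the gap in static-only analysis rather than closing it.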

Video replay attack

This is one of the most widely used presentation attacks: fraudsters simply play a pre-recorded video of the legitimate user’s face on a screen. This method stands a decent chance against systems that lack liveness detection because the video usually shows natural head movements and blinking.

Deepfake presentation attack


A deepfake presentation attack is far more technologically advanced than the others. Instead of replaying recorded footage, attackers use AI models to generate synthetic video in real time, dynamically manipulating a face and adjusting its expressions and blinking patterns. A high-quality deepfake can be so realistic that even humans sometimes struggle to tell it apart from a real subject.

How to choose a presentation attack detection system?

Presentation attack detection (PAD) is a countermeasure that determines whether a biometric sample comes from a genuine, live person (a bona fide presentation) or from a presentation attack. PAD operates on the principle that biometric systems should not just recognize an individual’s physical characteristics, but also validate that those characteristics belong to a living human (liveness detection).

There are two notable liveness detection methods that PAD systems employ—active and passive PAD:

Active PAD compels a user to follow an on-screen prompt and perform a certain action (e.g., turning their head) in order to verify their presence. That is important because a pre-recorded video or a printed face image cannot react to these kinds of instructions, making it obvious that the input is fraudulent.

Passive PAD, on the other hand, does not require the user to do anything specific. Instead, the system analyzes the physical characteristics of a face, its texture consistency, and microexpressions—things that can’t be easily faked.
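Active PAD is essentially a challenge-response protocol. The sketch below is a minimal illustration, not any vendor’s implementation: it issues an unpredictable sequence of prompts and accepts the session only if the actions observed on camera match that sequence, in order, within a time limit. The action names, the 10-second limit, and the assumption that a separate tracker converts video into action labels are all hypothetical.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional, Sequence

# Hypothetical set of prompts; a real system defines its own.
ACTIONS = ("turn_left", "turn_right", "look_up", "blink", "smile")

@dataclass
class Challenge:
    steps: tuple[str, ...]
    issued_at: float = field(default_factory=time.monotonic)
    ttl_s: float = 10.0  # hypothetical time limit for the whole response

def issue_challenge(n_steps: int = 3) -> Challenge:
    # secrets.choice draws from a cryptographically secure source, so an
    # attacker cannot know the sequence in advance and pre-record it.
    return Challenge(tuple(secrets.choice(ACTIONS) for _ in range(n_steps)))

def verify_response(ch: Challenge, observed: Sequence[str],
                    now: Optional[float] = None) -> bool:
    """Pass only if the observed actions match the prompts, in order, in time.

    `observed` is assumed to come from a separate face/pose tracker that
    turns camera frames into action labels; that part is not shown here.
    """
    now = time.monotonic() if now is None else now
    if now - ch.issued_at > ch.ttl_s:
        return False  # stale or too-slow response
    return tuple(observed) == ch.steps
```

Because a real-time deepfake can, in principle, follow prompts as well, a challenge like this is one layer of defense rather than a complete one.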

Active PAD is generally considered the more reliable of the two because the user must respond correctly to prompts an attacker cannot predict in advance, while passive PAD is less intrusive for users. A well-designed PAD system supports both, giving the business greater flexibility.
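Passive techniques vary widely, and vendors rarely disclose theirs. One classic approach from the research literature is texture analysis with local binary patterns (LBP): printing and screen replay alter fine skin texture (blur, halftone dots, moiré), which shifts the distribution of LBP codes. The sketch below computes a basic 8-neighbor LBP histogram with NumPy; in a full system the histogram would be fed to a classifier trained on bona fide and attack samples, which is not shown. It illustrates the general idea only and is not a description of Regula’s method.

```python
import numpy as np

def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Basic 3x3 local binary pattern histogram (256 bins, sums to 1).

    Each interior pixel gets an 8-bit code in which bit k is set when the
    k-th neighbor is at least as bright as the center pixel.
    """
    g = np.asarray(gray)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Neighbor offsets (dy, dx), clockwise from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        # Shifted view of the image aligned with the interior pixels.
        neighbor = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= center).astype(np.uint8) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

A perfectly uniform patch maps every pixel to code 255, while natural or noisy texture spreads the histogram across many codes; the classifier learns which spreads are typical of live skin.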

Another key indicator of a reliable PAD system is compliance with the ISO/IEC 30107-3 standard. The standard defines how PAD performance is tested and reported, including its error-rate metrics, and compliance can only be confirmed through rigorous testing by an accredited independent laboratory.
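ISO/IEC 30107-3 reports PAD errors with two core metrics. The attack presentation classification error rate (APCER) is the proportion of attack presentations of a given attack instrument species (for example, prints or screen replays) that are wrongly accepted as bona fide; it is computed per species, and the highest per-species value is typically the headline figure. The bona fide presentation classification error rate (BPCER) is the proportion of genuine presentations wrongly rejected as attacks. A minimal sketch of both calculations, using a hypothetical input format of (label, predicted_attack) pairs:

```python
from collections import defaultdict

def pad_error_rates(results):
    """Compute APCER per attack species, the worst-case APCER, and BPCER.

    `results` is an iterable of (label, predicted_attack) pairs, where label
    is "bona_fide" or an attack species name such as "print" or "replay",
    and predicted_attack is True when the PAD subsystem flagged an attack.
    """
    attack_counts = defaultdict(lambda: [0, 0])  # species -> [accepted, total]
    bf_rejected = bf_total = 0
    for label, predicted_attack in results:
        if label == "bona_fide":
            bf_total += 1
            bf_rejected += int(predicted_attack)  # genuine user wrongly rejected
        else:
            attack_counts[label][1] += 1
            attack_counts[label][0] += int(not predicted_attack)  # attack slipped through
    apcer = {s: acc / tot for s, (acc, tot) in attack_counts.items()}
    worst_apcer = max(apcer.values(), default=0.0)
    bpcer = bf_rejected / bf_total if bf_total else 0.0
    return apcer, worst_apcer, bpcer
```

The two rates trade off against each other through the decision threshold: making the system stricter lowers APCER but rejects more genuine users, which is why both are reported together.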

Preventing presentation attacks with Regula’s technology

Presentation attacks may be getting more and more sophisticated, but so are the tools for countering them. At Regula, we continuously refine our flagship solution, Regula Face SDK, to deliver the most secure ID verification and face biometrics possible.

Our extensive collection of presentation attack tools allows us to perform rigorous testing and steady improvement of our liveness detection system.

At Regula's lab, we are always testing new ways in which presentation attack detection systems can be tricked.

On top of being compliant with ISO/IEC 30107-3, our cross-platform biometric verification solution features:

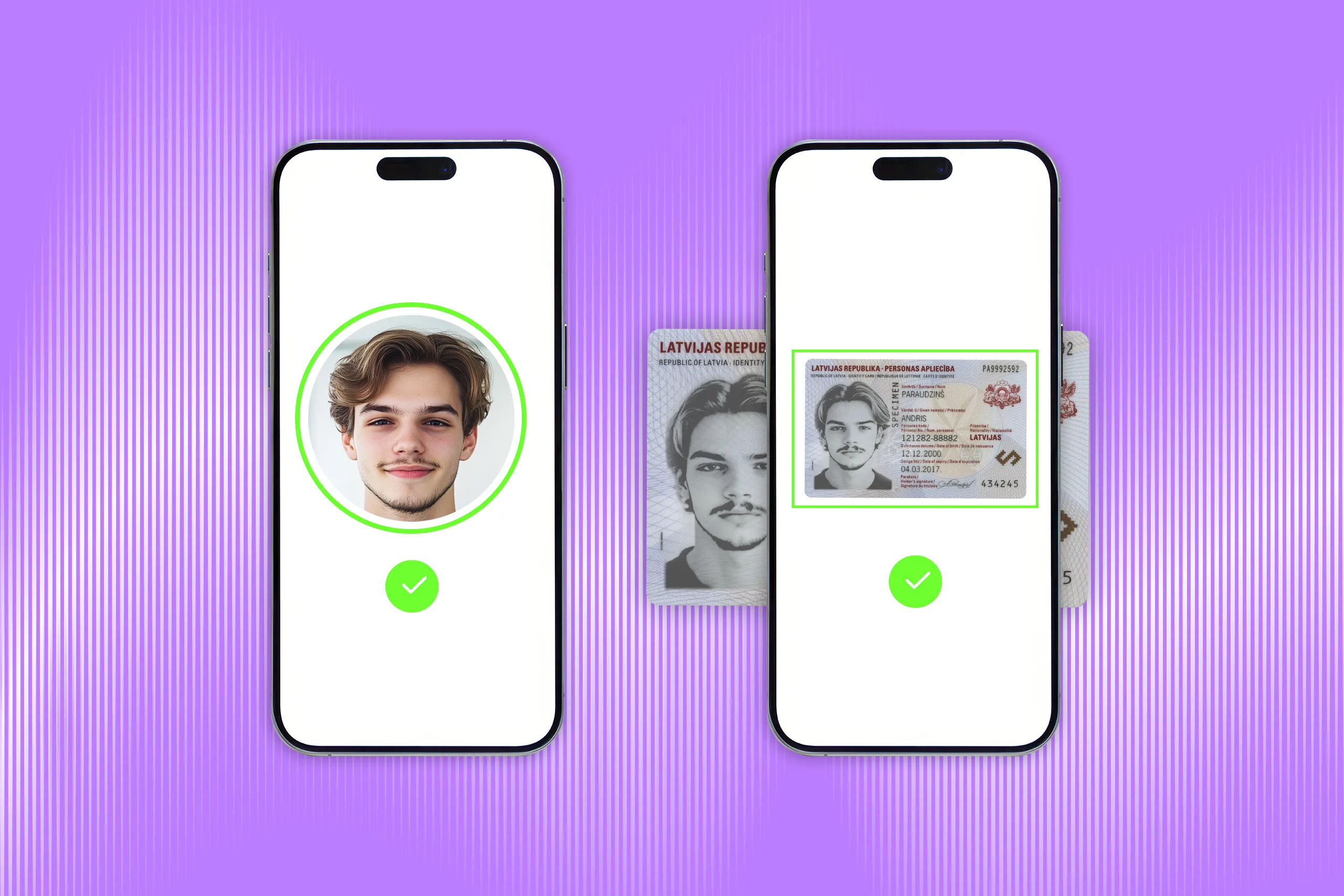

Advanced facial recognition with liveness detection: The SDK uses precise facial recognition algorithms with active liveness detection to verify users in real time, preventing spoofing through photos or videos.

Face attribute evaluation: The SDK assesses key facial attributes like age, expression, and accessories to improve accuracy and security during identity verification.

Signal source control: The SDK prevents the signal source from being tampered with, protecting against deepfake injection attacks.

Adaptability to various lighting conditions: The SDK operates effectively in almost any ambient light.

1:1 face matching: The SDK matches the user’s live facial image to their ID document or database entry, verifying identity at a 1:1 level.

1:N face recognition: The SDK scans and compares the user’s facial data against a database, identifying them from multiple entries at once (1:N).
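The difference between the two matching modes can be sketched with face embeddings, the fixed-length vectors a face recognition model produces. The example below assumes such embeddings already exist and compares them with cosine similarity; the threshold value and function names are hypothetical and do not reflect the Regula Face SDK API. In 1:1 verification the probe is compared with one claimed identity; in 1:N identification it is compared with every enrolled entry, and the best match above the threshold wins.

```python
import numpy as np

THRESHOLD = 0.6  # hypothetical; real systems tune this on evaluation data

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify_1to1(probe: np.ndarray, reference: np.ndarray) -> bool:
    """1:1: does the live face match one claimed identity (e.g. the ID photo)?"""
    return cosine(probe, reference) >= THRESHOLD

def identify_1toN(probe: np.ndarray, gallery: dict[str, np.ndarray]):
    """1:N: which enrolled identity, if any, best matches the probe?"""
    best_id, best_score = None, -1.0
    for identity, emb in gallery.items():
        score = cosine(probe, emb)
        if score > best_score:
            best_id, best_score = identity, score
    # Return no identity when even the best match is below the threshold.
    return (best_id, best_score) if best_score >= THRESHOLD else (None, best_score)
```

Because 1:N search makes many comparisons per query, the chance of a false match grows with the size of the database, so identification thresholds are usually set more strictly than verification ones.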

Have any questions? Don’t hesitate to contact us and we will tell you more about what Regula Face SDK has to offer.