There is no doubt that AI is changing identity verification, just as it is changing the rest of the world. Governments and businesses worldwide are increasingly using AI in their identity verification systems, not only for efficiency but also for effectiveness. At the same time, criminals have ramped up their AI-powered scams, creating the now-infamous deepfakes or forging entirely new identities.

In this article, we’ll examine both sides of AI in identity verification: how exactly AI helps verify individuals (through biometrics, liveness, and fraud analytics) and how AI can also hamper the process (via deepfake fraud and synthetic IDs). We will also give our opinion on what the future holds for the industry and what can be done to make sure the bright side wins.

The bright side: How AI helps identity verification

In recent years, we have seen AI technologies dramatically improve the security, accuracy, and efficiency of identity verification processes. As long as you don’t use ChatGPT to authenticate important ID documents, you can benefit from AI in more ways than one:

Biometric matching

Nowadays, identity verification relies heavily on biometrics such as facial, fingerprint, and voice recognition. These are areas where AI has vastly improved accuracy, thanks to its ability to map a person’s distinctive features (for example, the geometry of their face) and compare them against the photo on an ID document or a stored template.
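To make “compare them against the photo on an ID document” more concrete: face matchers typically convert each image into a numeric feature vector (an embedding) and measure how close two vectors are. The sketch below assumes the embeddings have already been produced by some face recognition model (not shown), uses cosine similarity as the comparison, and applies a made-up threshold of 0.6. The function names, threshold, and toy vectors are hypothetical, not any particular vendor’s method.

```python
import math
from typing import Sequence

# Hypothetical decision threshold; real systems calibrate it on labelled data.
MATCH_THRESHOLD = 0.6


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def is_same_person(selfie_embedding: Sequence[float],
                   document_embedding: Sequence[float],
                   threshold: float = MATCH_THRESHOLD) -> bool:
    """1:1 check: accept if the selfie and ID-photo embeddings are similar enough."""
    return cosine_similarity(selfie_embedding, document_embedding) >= threshold


# Toy 4-dimensional vectors; real face embeddings typically have hundreds of dimensions.
selfie = [0.12, 0.85, 0.31, 0.40]
id_photo = [0.10, 0.80, 0.35, 0.42]
stranger = [0.90, 0.05, 0.10, 0.20]
print(is_same_person(selfie, id_photo))   # True
print(is_same_person(selfie, stranger))   # False
```

Where the threshold sits is a business decision: it trades off letting impostors through against rejecting legitimate users.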

The U.S. Department of Homeland Security describes face recognition and face capture as “powerful AI technologies” and uses them itself in 14 distinct applications, with the lowest reported success rate among them at 97%.

Meanwhile, in Europe, the EU AI Act came into force on 1 August 2024. Its primary goal is to protect EU businesses and customers from AI misuse. It will become generally applicable in August 2026, with intermediate stages giving companies additional guidance to prepare their systems for full compliance by August 2027. Under this law, a range of applications are classified as high-risk, including several relevant to identity verification (IDV), such as biometric identification and border control management, as well as other uses like CV-sorting software.

To comply, organizations must:

Implement a risk assessment and security framework, including logging all system activities and preparing detailed documentation for regulatory review.

Use high-quality datasets to train neural networks and minimize biased outcomes.

Ensure human oversight of AI-based identity verification systems.

Liveness detection

One of the most common ways to fool a face matching system is to present a photo or video of someone else. This would be an extremely effective method if it weren’t for liveness detection.

Liveness detection makes sure that an actual, live person is present during biometric verification by analyzing subtle cues such as blinking, facial texture, 3D depth, or motion. These physical indicators of liveness are extremely hard to spoof with a photograph or pre-recorded video. Many online identity verification services now integrate such tests into their enrollment flows (for instance, asking users to turn their head).
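Blinking is one of the easier liveness cues to illustrate. A widely used heuristic is the eye aspect ratio (EAR), computed from six landmarks around each eye: it stays roughly constant while the eye is open and drops sharply when it closes. The sketch below assumes the landmarks have already been extracted by a face landmark detector (not shown); the threshold, frame count, and helper names are illustrative assumptions. A replayed video can also blink, so production liveness checks combine many more signals (texture, depth, motion) than this one.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Illustrative values only; real systems tune these per camera and landmark model.
EAR_THRESHOLD = 0.2
MIN_CLOSED_FRAMES = 2


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks ordered p1..p6 around the eye.

    p1 and p4 are the eye corners, p2/p3 lie on the upper lid, p6/p5 on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: Sequence[float],
                 threshold: float = EAR_THRESHOLD,
                 min_frames: int = MIN_CLOSED_FRAMES) -> int:
    """Count runs of >= min_frames consecutive frames whose EAR is below threshold."""
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667

# One real blink (two closed frames) and one single-frame dip treated as noise.
live_clip = [0.31, 0.30, 0.12, 0.10, 0.29, 0.30, 0.15, 0.31]
photo_clip = [0.30] * 8  # a printed photo never closes its eyes
print(count_blinks(live_clip), count_blinks(photo_clip))  # 1 0
```

Requiring several consecutive low-EAR frames is a simple way to ignore single-frame landmark jitter.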

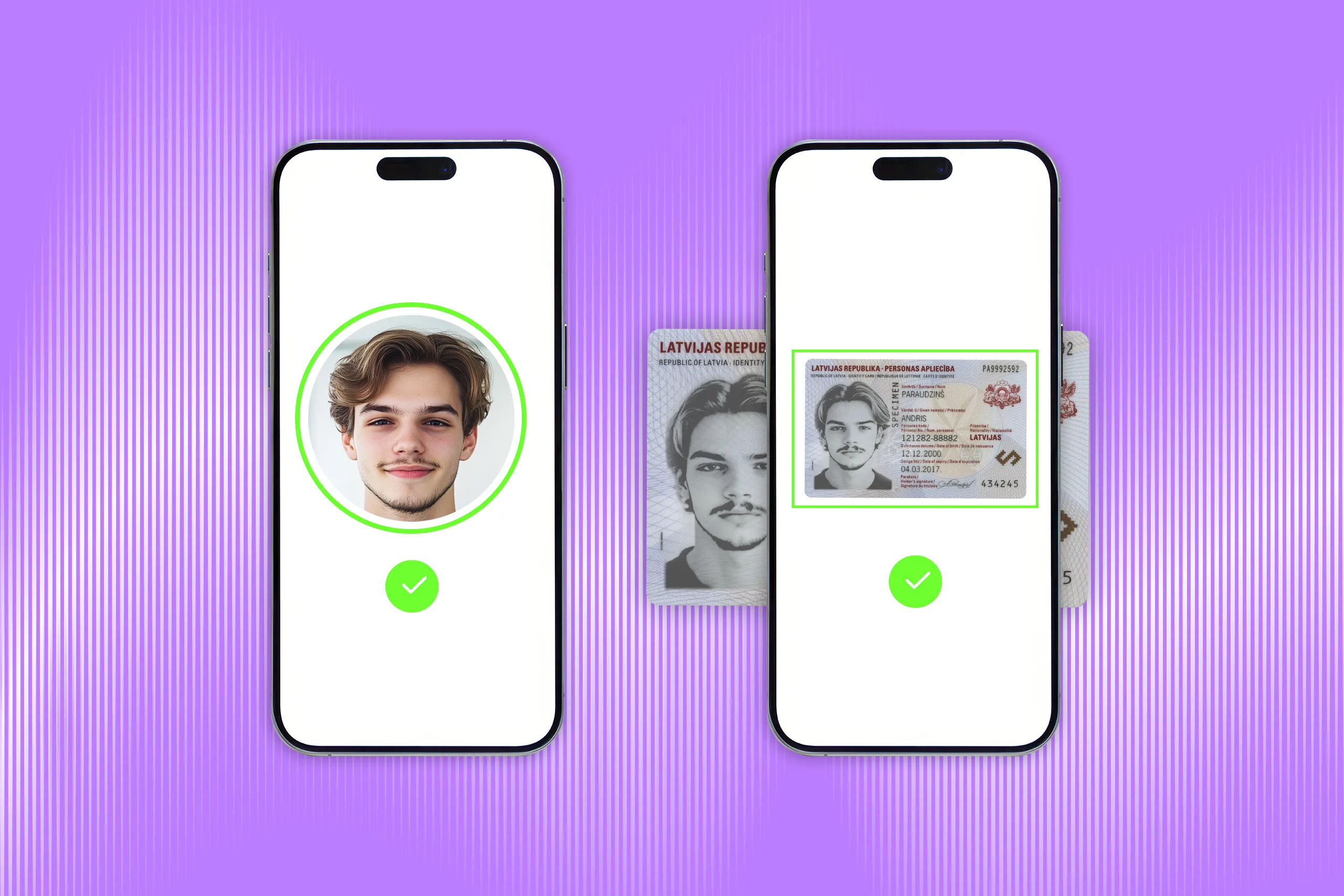

Automated document verification

Unlike lengthy and far more error-prone manual reviews, AI neural networks and computer vision systems can automatically inspect many types of ID documents, including passports, national identity cards, and driver’s licenses.

The models are trained to spot security features such as optically variable ink (OVI) and holograms, and to extract text from the ID with Optical Character Recognition (OCR) so that it can be cross-checked later. The document is also compared against a library of templates, sometimes numbering in the thousands, so that even subtle signs of tampering stand out.
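One concrete example of the cross-checks that follow OCR is validating the machine-readable zone (MRZ) on passports and many ID cards. ICAO Doc 9303 protects key MRZ fields (such as the document number, date of birth, and expiry date) with check digits computed using the repeating weights 7, 3, 1. The sketch below implements that rule; the helper names are hypothetical, and it is only one of many consistency checks a real document reader performs.

```python
# ICAO Doc 9303 check-digit rule for machine-readable zone (MRZ) fields.
WEIGHTS = (7, 3, 1)


def mrz_char_value(ch: str) -> int:
    """Digits keep their value, A-Z map to 10-35, and the filler '<' counts as 0."""
    if "0" <= ch <= "9":
        return ord(ch) - ord("0")
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field: str) -> int:
    """Weighted sum of character values (weights 7, 3, 1 repeating), modulo 10."""
    total = sum(mrz_char_value(ch) * WEIGHTS[i % 3] for i, ch in enumerate(field))
    return total % 10


def field_is_consistent(field: str, printed_check_digit: str) -> bool:
    """True if the OCR-read check digit matches the one recomputed from the field."""
    if len(printed_check_digit) != 1 or not ("0" <= printed_check_digit <= "9"):
        return False
    return mrz_check_digit(field) == int(printed_check_digit)


# Values from the ICAO 9303 specimen passport: document number, birth date, expiry date.
print(mrz_check_digit("L898902C3"))  # 6
print(mrz_check_digit("740812"))     # 2
print(mrz_check_digit("120415"))     # 9
# A single altered character breaks the check.
print(field_is_consistent("L898902C4", "6"))  # False
```

A failing check digit points to an OCR misread or an edited field. A passing one proves little on its own, since forgers can compute check digits too, which is why it is combined with security-feature and template checks.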


The dark side: How AI hampers identity verification

At the same time, there is a very different side to the AI story. While AI has undoubtedly bolstered many defenses, it has also armed fraudsters with new tools to undermine those same defenses. Malicious actors are using AI algorithms to impersonate others, create fake identities, or fool biometric checks, sometimes with alarming success.

Deepfake identity fraud

Perhaps the most disruptive threat is the rise of deepfakes: AI-generated videos, images, or audio that mimic real people. Some are sophisticated enough to pass live ID verification, with impostors using digital puppets to replicate the victim’s face or an AI-cloned voice to impersonate them. Multiple industry reports from 2024 have documented this, with one stating that “biometric authentication systems using face or voice recognition have already been compromised by deepfake technology in several critical cases.”

One striking incident occurred in early 2024: criminals created a deepfake of a company’s CFO and other employees to trick a finance officer. In a video conference, the staff member saw what appeared to be their CFO’s face giving instructions. Believing it was genuine, they subsequently wired $25 million to the attackers’ accounts before the scam was uncovered.

Synthetic identity fraud

Another fast-growing form of financial crime is synthetic identity fraud, where criminals create a completely fake person by blending real data (e.g., a valid Social Security number) with fabricated details. Historically, synthetic identities have been hard to detect because they don’t correspond to a single real person (so there is no victim to report the fraud), and fraudsters can slowly “age” a fake credit profile to escape notice.

Now AI makes this even easier: fraudsters can generate unique profile photos for their synthetic personas, so a reverse image search won’t turn up a reused stock photo. On top of that, they may even use AI to generate fake supporting documents.

Our own recent survey found that about 49% of U.S. businesses and 51% of UAE businesses are already struggling with synthetic IDs being used to apply for services. Even more disturbingly, investigative journalists have shown how easy (and cheap) it is to obtain high-quality fake credentials. In one 2024 test, a researcher generated a fictitious driver’s license through an underground AI service using his own photo and fake personal details for just $15 (!). The service produced a hyper-realistic ID image with a matching signature and details, which he submitted to a crypto exchange’s KYC verification. It passed automated review, allowing him to open an account.

False positives

This problem is not a malicious attempt at identity fraud but an unwanted consequence of imperfect systems: false positives, where legitimate users are flagged as fraudulent. They can be caused by changes in lighting, an aging ID photo, or even quirks in how an algorithm detects patterns. Each mistaken rejection understandably frustrates users and forces companies to fall back on human oversight rather than relying on automation alone. To improve accuracy and minimize errors, organizations can implement a RAG evaluation, which helps systematically assess risks, flag potential issues, and prioritize corrective actions in automated systems.
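How often legitimate users are wrongly rejected depends heavily on where the match threshold is set. Raising it blocks more impostors but rejects more genuine users, and lowering it does the opposite. The sketch below uses made-up similarity scores to compute the false accept rate (FAR) and false reject rate (FRR) at a few thresholds; the scores and function name are hypothetical, and this illustrates the trade-off rather than a calibration procedure.

```python
from typing import Sequence, Tuple


def error_rates(genuine_scores: Sequence[float],
                impostor_scores: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FAR, FRR) at a threshold; a pair is accepted when score >= threshold.

    FAR: share of impostor comparisons wrongly accepted.
    FRR: share of genuine comparisons wrongly rejected (the "false positives"
    that flag legitimate users).
    """
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return false_accepts / len(impostor_scores), false_rejects / len(genuine_scores)


# Made-up similarity scores for illustration only.
genuine = [0.91, 0.85, 0.78, 0.66, 0.55]   # same person (e.g., selfie vs. own ID)
impostor = [0.12, 0.25, 0.40, 0.58, 0.62]  # different people

for t in (0.5, 0.6, 0.7):
    far, frr = error_rates(genuine, impostor, t)
    print(f"threshold={t}: FAR={far:.0%}, FRR={frr:.0%}")
# threshold=0.5: FAR=40%, FRR=0%
# threshold=0.6: FAR=20%, FRR=20%
# threshold=0.7: FAR=0%, FRR=40%
```

No single threshold drives both rates to zero here, which is why borderline cases are often routed to human review instead of being rejected outright.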

Neural networks can also fail in ways that feel, or actually are, biased. If a model hasn’t been trained on a diverse dataset, it may struggle to recognize the faces of certain demographic groups, leading to higher false rejection rates for those groups. For instance, in the UK, an Uber Eats courier was unfairly removed from the platform after its AI-based face check repeatedly failed to verify him. He was told there were “continued mismatches” with his selfies, which prompted a discrimination lawsuit that ended in a payout.
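One practical safeguard against this kind of bias is to measure error rates per demographic group rather than only in aggregate. The sketch below assumes you have labelled records of genuine verification attempts, each tagged with a group label and whether the system rejected it. The record format, group labels, and function names are hypothetical, and what counts as an acceptable disparity is a policy question the code does not answer.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def frr_by_group(attempts: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """False reject rate per group.

    Each record is (group_label, was_rejected) for a *genuine* verification
    attempt, i.e. the person really was who they claimed to be.
    """
    totals: Dict[str, int] = defaultdict(int)
    rejects: Dict[str, int] = defaultdict(int)
    for group, rejected in attempts:
        totals[group] += 1
        if rejected:
            rejects[group] += 1
    return {group: rejects[group] / totals[group] for group in totals}


def worst_to_best_ratio(rates: Dict[str, float]) -> float:
    """How many times higher the worst group's FRR is than the best group's."""
    worst, best = max(rates.values()), min(rates.values())
    if best == 0:
        return float("inf") if worst > 0 else 1.0
    return worst / best


# Hypothetical evaluation log: group B is rejected four times as often as group A.
log = [("A", False)] * 95 + [("A", True)] * 5 + [("B", False)] * 80 + [("B", True)] * 20
rates = frr_by_group(log)
print(rates)                       # {'A': 0.05, 'B': 0.2}
print(worst_to_best_ratio(rates))  # 4.0
```

An aggregate FRR of 12.5% on this log would hide the fact that one group bears most of the rejections.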

Which side is winning?

In our opinion, most deepfakes are still detectable today, either by eagle-eyed humans or by AI-powered identity verification solutions that have been around for some time. That said, deepfake threats are evolving very quickly, and we are already close to seeing highly convincing samples that barely arouse any suspicion.

So the priority should be on making the good AI “outtrain” the bad one: continually retraining it on more and more data about fakes. Teams should be on the lookout for anything fishy or inconsistent during ID liveness checks, capturing new data samples and feeding them to the system.

Fortunately, current AI-generated identity fraud still has some blind spots. Deepfakes, for instance, often fail to render shadows correctly or have odd backgrounds. Fake documents also typically lack dynamic security features and cannot display the specific images that genuine documents show only at certain viewing angles. Another key challenge for criminals is that many AI models are trained primarily on static face images, mainly because these are far more readily available online. Such models struggle to stay realistic in 3D liveness video sessions, where individuals need to turn their heads.

Moreover, modern IDs often incorporate dynamic security features that are visible only when the document is in motion. The industry is constantly innovating in this area, making it nearly impossible to create convincing fake documents that can pass a capture session with liveness validation, in which the document must be tilted to different angles. Hence, requiring users to present their physical ID during a liveness check can significantly boost an organization’s security.

Businesses can also combat deepfakes by taking full control of the signal source, that is, the camera feed. Some native mobile platforms do not allow the video stream to be tampered with (for example, by substituting a virtual camera), which is a massive help. There is always multi-factor authentication, too: an individual can be identified not only by their ID or biometrics but also by a number of other means (e.g., address, phone number, database checks, other personal info).
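The layered approach described above can be expressed as a simple decision policy. The sketch below is entirely hypothetical: the signal names, the rule of requiring at least one extra factor beyond the document, face, and liveness checks, and the choice to send borderline cases to manual review are illustrative assumptions, not a standard or recommended policy.

```python
from dataclasses import dataclass


@dataclass
class VerificationSignals:
    """Outcomes of independent checks for one applicant (hypothetical fields)."""
    document_authentic: bool
    face_matches_document: bool
    liveness_passed: bool
    phone_verified: bool = False
    address_verified: bool = False
    database_match: bool = False


def decide(s: VerificationSignals, min_extra_factors: int = 1) -> str:
    """Hypothetical layered policy returning 'approve', 'manual_review', or 'reject'."""
    core_failures = sum(not ok for ok in (s.document_authentic,
                                          s.face_matches_document,
                                          s.liveness_passed))
    extra_factors = sum((s.phone_verified, s.address_verified, s.database_match))
    if core_failures == 0 and extra_factors >= min_extra_factors:
        return "approve"
    if core_failures >= 2:
        # Several independent checks failing together is a strong fraud signal.
        return "reject"
    # One failed core check (possibly a false positive) or too few extra factors:
    # route to a human instead of trusting a single automated signal.
    return "manual_review"


print(decide(VerificationSignals(True, True, True, phone_verified=True)))  # approve
print(decide(VerificationSignals(True, True, True)))                        # manual_review
print(decide(VerificationSignals(True, False, True, database_match=True)))  # manual_review
print(decide(VerificationSignals(False, True, False)))                      # reject
```

The point of the design is that a single convincing deepfake defeats at most one signal, while approval requires several independent ones to agree.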

Ultimately, it's a constant cat-and-mouse game with fraudsters, and the results are often unpredictable. But, for now, it feels like the bright side might just have the upper hand.

Making ID verification secure

Your ID verification process can greatly benefit from robust software solutions that make it not only secure, but also user-friendly and compliant. For instance, ID verification and face biometrics with liveness checks can be carried out by solutions like Regula Document Reader SDK and Regula Face SDK, respectively.

Document Reader SDK processes images of documents and verifies their real presence (liveness) and authenticity, beating the vast majority of fraudulent AI use. The software identifies the document type, extracts all the necessary information, and confirms whether the document is genuine.

At the same time, Regula Face SDK conducts instant facial recognition and prevents AI-powered presentation attacks with advanced liveness detection and face attribute evaluation.