Age verification is moving from a niche compliance requirement to a core platform function. Between 2024 and 2026, governments in Australia, Europe, and the US have been shifting from voluntary guidance to enforceable mandates backed by fines, audits, and access blocking. As a result, companies are being forced to rethink not just whether to verify age, but how to do it without undermining privacy, UX, or trust.

Chapter 1:

What is age verification? A brief overview

Age verification is a set of technical and organizational measures used to confirm whether a person meets a legally defined age threshold before accessing restricted goods, services, or content.

In practice, companies confirm customers’ age either online or on-site, using a variety of methods. Online, users typically verify their age themselves, for example by submitting a selfie, uploading an ID, or talking to a human inspector during a video call. On-site checks are usually performed manually by staff such as bank managers, cashiers, or casino security guards.

Age verification is subject to both global and local regulations, which vary widely across countries and regions. These rules determine which businesses must perform age checks and which methods are legally acceptable.

Chapter 2:

Main age verification concepts & definitions

As a technology, business practice, and regulatory requirement, age verification involves a wide range of terms and approaches.

Age gating

This method is commonly used by digital platforms to block access to age-restricted content through self-confirmation pop-ups. When visiting a website, users are asked to confirm whether they are over a certain age.

Age gating relies on self-declaration, typically a yes/no answer, with no proof of age and no further validation. This makes it the least restrictive, least reliable, and least secure age verification method. Even so, many online shops selling alcohol, tobacco, fireworks, pharmaceuticals, and other restricted goods continue to use it.

Age verification

While age verification is a general term covering all age confirmation methods, it’s often used to describe more reliable approaches — such as submitting a government-issued photo ID to confirm a user’s date of birth. Face matching can also be applied to ensure the person in the selfie matches the ID photo.
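Once OCR has extracted the date of birth, the threshold check itself is date arithmetic, but subtracting birth years alone overstates the age of anyone whose birthday hasn’t come yet this year. The Python sketch below assumes the date of birth has already been read from a document that passed authenticity checks; the function names are illustrative, and it treats a 29 February birthday as reached on 1 March in non-leap years, a convention that may differ by jurisdiction.

```python
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Return completed years of age on `today` (birthday-aware)."""
    years = today.year - dob.year
    # Subtract one year if this year's birthday hasn't happened yet.
    # A 29 February birthday therefore counts as reached on 1 March
    # in non-leap years (jurisdictions may use a different convention).
    if (today.month, today.day) < (dob.month, dob.day):
        years -= 1
    return years


def meets_threshold(dob: date, threshold: int, today: date) -> bool:
    """True if the person is at least `threshold` years old on `today`."""
    return age_on(dob, today) >= threshold


# Example: a document holder born on 15 June 2007, checked on 14 June 2025.
print(meets_threshold(date(2007, 6, 15), 18, date(2025, 6, 14)))  # False
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the check testable and lets you evaluate it in whichever time zone your compliance rules specify.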

Some countries, such as the US and Australia, issue IDs for young people with distinct designs that make underage holders easier to recognize: specific layouts (for example, the portrait-oriented driver’s license issued in Iowa) or distinct color schemes (as seen on Queensland’s photo ID card).

Age estimation

This method uses biometric data — typically, a live selfie presented during the process. Facial analysis algorithms automatically assess whether the person meets the age requirement. There are several approaches:

Estimating the user’s age based on facial features.

Comparing the selfie to a dataset of faces at different ages.

Verifying whether the person meets a specific age threshold: e.g., over 13, 16, 18, or 21.

Like ID-based methods, age estimation is generally considered a strong, compliant way to detect and block underage users.

Age assurance

Unlike the methods above, age assurance is an umbrella framework rather than a specific technique. In many jurisdictions, it is also a legal obligation requiring businesses to confirm that users meet minimum age criteria before accessing certain services or content. Since age restrictions vary globally, so do the methods allowed.

Zero-knowledge age proof

This privacy-preserving method relies on verifiable credentials and cryptography. Once a user registers with a trusted issuer, they can present cryptographically signed attestations of age, citizenship, or residency across services without revealing extra personal details. In this case, companies trust the attestation system itself and don’t ask for additional proof such as selfies or IDs.

While this concept is privacy-friendly and promising, it remains largely theoretical, with limited real-world adoption.
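The core idea of presenting an age attestation without extra personal details can be sketched as a signed claim that carries only the predicate the service needs. The Python below is a simplified illustration, not a zero-knowledge proof or a verifiable-credentials implementation: an HMAC with a shared key (`ISSUER_KEY`, a hypothetical value) stands in for the issuer’s public-key signature, and the claim format is an assumption.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real verifiable-credential schemes use the
# issuer's public-key signature, so verifiers never hold the signing key.
ISSUER_KEY = b"demo-issuer-key"


def _sign(claim: dict) -> str:
    # Canonical JSON so the issuer and verifier sign identical bytes.
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()


def issue_attestation(over_18: bool, ttl_seconds: float, now: float) -> dict:
    """Issuer side: sign a claim that contains only the age predicate."""
    claim = {"age_over_18": over_18, "expires_at": now + ttl_seconds}
    return {"claim": claim, "tag": _sign(claim)}


def verify_attestation(att: dict, now: float) -> bool:
    """Relying-party side: check integrity and expiry. No birth date is ever seen."""
    if not hmac.compare_digest(_sign(att["claim"]), att["tag"]):
        return False  # claim was altered or not issued with this key
    if att["claim"]["expires_at"] < now:
        return False  # attestation has expired
    return att["claim"]["age_over_18"] is True
```

In a real deployment, the relying party would verify the issuer’s signature with a public key and would also need protection against one credential being shared between users, which this sketch does not address.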

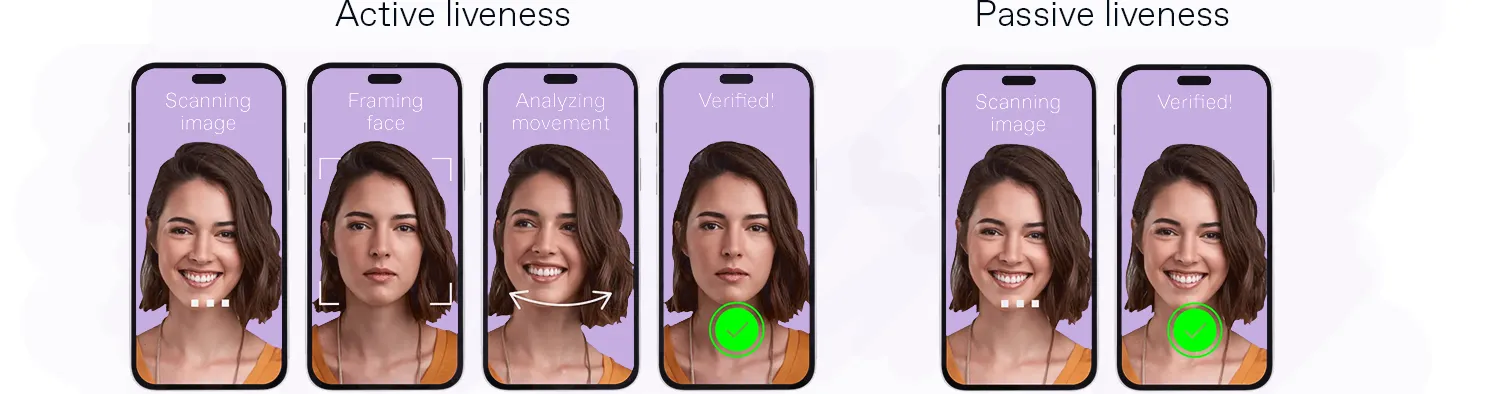

Liveness in age checks

While not a standalone method, liveness detection is essential for digital age and identity verification. It ensures that the selfie provided belongs to a real, live person, not a deepfake, printout, or screen image. This is done automatically using neural networks trained to detect facial movement and depth.

There are three main types of liveness detection:

Passive: A single image is analyzed.

Active: A short video showing movement (smiles, nods, etc.) is analyzed.

Hybrid: Combines a selfie with a random action for analysis.
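The reason hybrid and active checks use a random action is to defeat replay: a pre-recorded video cannot know which action will be requested. A minimal challenge-response sketch in Python, assuming a hypothetical face-analysis model reports which action it detected in the captured frames (the model itself is out of scope); the action list and 30-second expiry are illustrative values.

```python
import secrets

ACTIONS = ("turn_left", "turn_right", "smile", "blink")  # hypothetical action set
CHALLENGE_TTL_S = 30.0  # illustrative expiry window in seconds

# nonce -> (requested action, time issued)
_pending: dict[str, tuple[str, float]] = {}


def issue_challenge(now: float) -> tuple[str, str]:
    """Pick an unpredictable action and bind it to a single-use nonce."""
    nonce = secrets.token_hex(16)
    action = secrets.choice(ACTIONS)
    _pending[nonce] = (action, now)
    return nonce, action


def check_response(nonce: str, detected_action: str, now: float) -> bool:
    """`detected_action` would come from a (hypothetical) face-analysis model."""
    entry = _pending.pop(nonce, None)  # pop() makes each nonce single-use
    if entry is None:
        return False  # unknown or already used nonce: possible replay
    requested, issued_at = entry
    if now - issued_at > CHALLENGE_TTL_S:
        return False  # too slow: the response may have been prepared offline
    return detected_action == requested
```

In a real system, the pending-challenge table would live in a server-side store, and the random action only adds value if the analysis also confirms a live, physically present face.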

Parental consent mechanisms

Used when the user is a minor, this method involves a parent or guardian approving a child’s access to age-restricted content or services. Unlike age estimation or ID verification, parental consent doesn’t determine age. It simply attests that a minor is allowed access and is usually triggered by an age-related question or form.

These mechanisms are legally recognized in many countries and are often built into broader age assurance frameworks.

Learn more:

Explaining Levels of Age Verification: From Age Gating to Age Assurance

What Is Liveness Detection, and How Does It Help to Address Online Authentication Challenges?

Age Gating vs. Age Verification: Choosing the Right Approach

What Is Face Matching in ID Verification? A Quick Explanation

Chapter 3:

Global age verification landscape

When it comes to age verification laws, the global picture is uneven. Some countries follow centralized approaches with clear rules for different sectors, while others offer only general guidelines or lack regulation entirely.

Here is a brief overview of the legal landscape by region:

💡Disclaimer! The content provided in this article is for informational purposes only and does not constitute legal advice or a legal opinion.

Africa

In much of Africa, regulation exists more in principle than in operational detail. Most countries lack explicit online age verification laws and rely instead on general child protection frameworks.

One exception is South Africa, where providers of adult-only content, such as films, videos, and games, must use robust age verification methods to confirm that users are 18 or older (Films and Publications Amendment Regulations, 2022). Requirements for social media, gaming, or e-commerce, however, remain undefined.

Enforcement across the continent is low and inconsistent. Regulators tend to focus on content blocking rather than age verification, and there have been no major public penalty cases to date.

Asia

Asia also shows wide variation in age verification laws. In many countries, it’s unclear how platforms should verify age or how strictly the rules are enforced. However, some markets have adopted clear and mature frameworks.

China, South Korea, and Japan mandate strong age and identity checks, often requiring government-issued IDs, especially for online gaming, adult content, and digital payments. China also enforces real-name verification for telecom and online services.

In India, age-related laws vary by state. For instance, online gaming is banned in Karnataka, while in Tamil Nadu, providers must verify users and block minors from accessing such platforms (Real Money Games Regulations, 2025).

Overall, enforcement ranges from aggressive in jurisdictions like China to weak or fragmented elsewhere.

Australia and Oceania

Australia is a global leader in mandatory age verification. From December 10, 2025, under-16s have been banned from social media platforms like Facebook, Instagram, Kick, Reddit, and Snapchat. Platforms must verify users’ ages or face severe fines (up to AUD 49.5 million). Under the eSafety framework, adult-only services must also implement age assurance by March 9, 2026.

New Zealand is considering similar rules, though legislation has not yet passed. Other countries in the region show less consistency in this area.

Europe

European countries largely focus on shielding minors from harmful and adult content, and the number of states adopting these policies continues to grow. This applies not only to gambling and e-commerce platforms, but also to social media.

Some states have moved beyond basic age gating (“Are you over 18?”) and now require robust age verification. For instance, France and Germany mandate strict checks for adult content and block non-compliant sites. There are also moves toward social media bans for children in Spain, Denmark, Italy, Greece, and Finland. Many of these plans rely on stronger age verification, often linked to national or EU digital ID systems.

The UK’s Online Safety Act expands age verification requirements to cover social media and platforms hosting age-restricted content. In addition, the British Standards Institution (BSI) has recently pointed to a new international standard, ISO/IEC 27566-1, to help organizations design age-assurance systems that protect privacy and personal data. The framework focuses on how to check a user’s age without collecting more data than necessary.

Penalties are increasing across the region — for instance, UK fines can reach up to 10% of global revenue — and may include audits and site blocking. At the regional level, legislation such as GDPR also applies, holding companies responsible for unlawful data collection or weak safeguards, with fines of up to 4% of global annual turnover.

Latin America

Many countries in this region are shifting from minimal regulation like age self-declaration to stronger enforcement for digital platforms that minors can access. While comprehensive online age verification laws remain rare outside sectors like gambling and alcohol, some countries are setting clear frameworks.

For instance, Brazil’s Digital ECA statute, introduced in 2025, requires platforms to use reliable age verification methods and prohibits vague, easily bypassed checks. It also promotes the use of parental control tools, and penalties include fines of up to 10% of a company’s revenue in Brazil.

Another example is Argentina: since 2024, online gambling operators must verify users through biometric ID checks. This includes validation against the national ID database (RENAPER) and face verification for every login.

Middle East & North Africa (MENA)

MENA countries have historically focused on content bans rather than online age verification, blocking adult-only sites outright instead of verifying user age.

The UAE is an exception. A new child digital safety law, effective January 2026, requires all platforms operating in the country to verify user age and apply parental controls (via verified consent) for users under 13. This applies to global platforms such as TikTok, Twitch, and Roblox.

Elsewhere in the region, legal requirements around social media and gaming remain vague. Enforcement is often binary: comply or get blocked. So far, penalties have usually been tied to content violations, not age-check failures — though this may change as laws like the UAE’s gain traction.

North America

The region is transitioning from self-regulation to legal mandates in online age verification.

In the US, policies vary by state. Louisiana, Utah, Texas, Arkansas, and Virginia now require robust age verification, often via government ID or third-party age assurance providers, for adult content sites. While there’s no unified federal standard, laws like COPPA impose strict controls on data collection from users under 13, indirectly driving broader age verification practices.

Canada is moving toward a centralized model with the development of a children’s privacy framework that requires platforms to prevent minors from accessing harmful content using age assurance measures.

Enforcement across the region is strong, involving lawsuits, audits, and civil penalties. However, a lack of standardized technical approaches, along with ongoing privacy and free-speech debates, leaves many questions unresolved.

Learn more:

Kids Are Cheating Roblox with Fake Mustaches — Can’t Identity Verification Stop Them?

KYC Requirements in Australia: What You Need To Know in 2026

Chapter 4:

How does age verification work?

At its core, age verification is about collecting proof of age and analyzing it. Many companies now rely on selfies, IDs, or a combination of both. Let’s look at how these scenarios typically work.

Data inputs

The process always starts with the user submitting data like a photo, video, or ID. The system then evaluates the input quality and captures the necessary data using tools like OCR (to extract text like the date of birth), image quality assessment, and facial attribute analysis (detecting glasses, beards, masks, etc.).

To ensure reliable results, inputs must be well-lit, in focus, properly positioned, and free of glare and obstructions.
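A basic input-quality gate can reject images before any age analysis runs. The Python sketch below works on a grayscale image given as a list of pixel rows (values 0–255) and uses two simple heuristics: mean brightness for lighting, and the variance of a Laplacian filter for focus, where low variance suggests blur. The thresholds are hypothetical, and production systems typically use trained quality models that also detect glare and obstructions.

```python
from statistics import mean, pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian response; low values suggest blur."""
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def quality_issues(gray, min_brightness=60, max_brightness=200, min_sharpness=100.0):
    """Return a list of problems; an empty list means the image may proceed."""
    issues = []
    brightness = mean(v for row in gray for v in row)
    if brightness < min_brightness:
        issues.append("too_dark")
    elif brightness > max_brightness:
        issues.append("too_bright")
    if laplacian_variance(gray) < min_sharpness:
        issues.append("blurry")
    return issues
```

Returning named issues rather than a single pass/fail makes it easy to show the user a specific hint, such as moving to better light, which supports the guided capture process discussed later.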

The system must also confirm that the data is real, not AI-generated or manipulated. For instance, attackers may use video injection to spoof the process, but such attempts are typically caught by advanced liveness checks. Similarly, ID liveness detection can confirm that a genuine physical document is being presented rather than a printout or an image on a screen.

Accuracy limits and error rates

Most age verification steps are automated and run by neural network algorithms. Their performance depends on several behind-the-scenes factors: training dataset quality, similarity rates, age thresholds, and even app usability.

Human review should always be available as a fallback for uncertain or disputed results. Just as important, companies must test age verification systems on real-world user data to evaluate their accuracy.
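Evaluating accuracy on real-world data comes down to counting two kinds of error on a labeled test set: minors who would be let through and adults who would be turned away. A minimal Python sketch, assuming you have pairs of true age (for example, from consented test participants) and the system’s estimated age; `pass_at` is kept separate from the legal age so you can see how a stricter cutoff shifts the balance between the two errors. The data below is a toy example, not a measurement.

```python
def error_rates(samples, legal_age=18, pass_at=18.0):
    """samples: (true_age, estimated_age) pairs from a labeled test set.

    Returns the share of minors who would pass and of adults who would fail
    (None when the test set has no one in that category).
    """
    minors = [est for true_age, est in samples if true_age < legal_age]
    adults = [est for true_age, est in samples if true_age >= legal_age]
    minors_passed = sum(1 for est in minors if est >= pass_at)
    adults_rejected = sum(1 for est in adults if est < pass_at)
    return {
        "minor_pass_rate": minors_passed / len(minors) if minors else None,
        "adult_reject_rate": adults_rejected / len(adults) if adults else None,
    }


test_set = [(16, 19.0), (17, 16.5), (20, 17.0), (30, 31.0)]  # toy data
print(error_rates(test_set))                # {'minor_pass_rate': 0.5, 'adult_reject_rate': 0.5}
print(error_rates(test_set, pass_at=25.0))  # {'minor_pass_rate': 0.0, 'adult_reject_rate': 0.5}
```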

Bias and demographic challenges

Automated age verification checks can produce errors, which are often due to bias in the datasets used for system training and calibration.

For example, datasets may reflect only certain demographics (age groups, genders, nationalities) or image types (studio-quality shots vs. mobile camera photos). These imbalances can lead to inaccurate results.

To avoid this, companies should fine-tune age verification solutions based on real users and key variables like similarity rates and age thresholds.
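To check for the demographic imbalances described above, the same error counts can be broken down by group and compared. In this Python sketch, the group labels are hypothetical and would come from a consented, labeled evaluation set; a large gap between groups on either rate is a signal to recalibrate or retrain.

```python
from collections import defaultdict


def rates_by_group(records, legal_age=18, pass_at=18.0):
    """records: (group, true_age, estimated_age) triples. Returns rates per group."""
    counts = defaultdict(lambda: {"minors": 0, "minors_passed": 0,
                                  "adults": 0, "adults_rejected": 0})
    for group, true_age, est in records:
        c = counts[group]
        if true_age < legal_age:
            c["minors"] += 1
            c["minors_passed"] += int(est >= pass_at)
        else:
            c["adults"] += 1
            c["adults_rejected"] += int(est < pass_at)
    return {
        group: {
            "minor_pass_rate": c["minors_passed"] / c["minors"] if c["minors"] else None,
            "adult_reject_rate": c["adults_rejected"] / c["adults"] if c["adults"] else None,
        }
        for group, c in counts.items()
    }


def largest_gap(rates, metric):
    """Difference between the best- and worst-served groups for one metric."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0
```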

Privacy-by-design approaches

When personal data and biometrics are involved, users expect strong privacy safeguards. That’s why privacy-by-design is essential, embedding privacy into the system from the start.

Best practices include minimizing data collection, informing users, and protecting data throughout its lifecycle. For instance, some companies delete user data immediately after verification. Others store only digital descriptors of biometric data: templates designed to be non-reversible, which limits the damage if they are breached.
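One way to apply data minimization is to make the stored record structurally incapable of holding raw evidence. The Python sketch below keeps only the outcome of a check; the field names and method labels are illustrative assumptions. Clearing the in-memory `evidence` dictionary only drops this process’s references to the data; deleting copies in storage, backups, or a provider’s systems has to be handled separately.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgeCheckRecord:
    """What is retained after a check: the outcome, not the evidence."""
    user_ref: str         # internal account ID, not a document number
    threshold: int        # e.g. 18
    passed: bool
    method: str           # e.g. "id_document" or "age_estimation" (illustrative)
    checked_at: datetime


def finalize_check(user_ref: str, threshold: int, method: str,
                   evidence: dict, passed: bool) -> AgeCheckRecord:
    record = AgeCheckRecord(user_ref, threshold, passed, method,
                            datetime.now(timezone.utc))
    # Drop this process's references to selfies, OCR fields, birth date, etc.
    # Copies in storage, backups, or a provider's systems need separate deletion.
    evidence.clear()
    return record
```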

On-device vs. server-side checks

Where the data is processed also matters for both privacy and security. Many businesses now prefer mobile-first, on-device age verification for convenience. However, on-device checks run in an environment the user controls, which makes them more vulnerable to presentation attacks, tampering, and other manipulation.

For this reason, some organizations add server-side verification as a second layer. In this setup, the mobile data is rechecked in a secure environment to catch spoofing or injection attempts before final approval.
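The key rule for the server-side layer is that the device’s own verdict is never sufficient on its own. In this Python sketch, `server_checks` stands for hypothetical server-side analyzers (for example, re-running liveness or document checks on the raw captures); approval requires both layers to agree, and a device that reports success when the server disagrees is flagged as possible tampering.

```python
from typing import Callable, List

# Hypothetical server-side analyzer: takes the raw submission, returns pass/fail.
Check = Callable[[dict], bool]


def final_decision(submission: dict, server_checks: List[Check]) -> str:
    """Combine the device's verdict with independent server-side re-checks."""
    if not server_checks:
        # all([]) is True, so an empty list would silently trust the device.
        raise ValueError("at least one server-side check is required")
    client_pass = submission.get("client_verdict") == "pass"
    server_pass = all(check(submission) for check in server_checks)
    if client_pass and server_pass:
        return "approve"
    if client_pass and not server_pass:
        # The device claimed success but the server disagrees: possible tampering.
        return "reject_and_flag"
    if server_pass:
        return "review"  # inconsistent results: route to a human or a retry
    return "reject"
```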

Learn more:

Video Injection Attacks in Remote IDV: Scenarios & Solutions

Presentation Attacks: What Liveness Detection Systems Protect From Every Day

Are Businesses Overtrusting Biometric IDV? An Expert Opinion

Chapter 5:

Risks & ethics of age verification

Age verification is used to support informed decisions about access to specific services and goods (for instance, to assess a loan applicant’s risk profile or to protect minors from inappropriate content). However, if poorly implemented, it can compromise user privacy. The ethical goal for businesses is clear: confirm a customer’s age using only the necessary data.

Over-collection of personal data

Age checks often overlap with broader identity verification processes. That can lead to collecting far more than just a date of birth or facial image: full ID details, IP addresses, and behavioral signals can also be collected and analyzed. In most cases, this exceeds what’s needed to answer a simple question: “Is this user above the required age?” Over-collection increases the impact of potential data breaches and complicates compliance obligations.

💡Solution: Take a customer-centric approach. Trigger age checks only when needed based on legal requirements or risk levels, and treat them as distinct from full ID verification where possible.

Function creep

In most cases, users simply want access to age-restricted content. But sometimes, they’re forced into full identification because of function creep — where a system designed for one purpose ends up being used for others. This can ruin user trust and threaten anonymous access, particularly for low-risk services.

💡Solution: Define and follow strict technical and consent boundaries. Don’t reuse age-verification data for unrelated purposes like advertising, profiling, or risk scoring.
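A technical boundary against function creep can be as simple as making every read of age-check data declare its purpose. The Python sketch below uses a hypothetical allowlist of purposes; in practice, this would sit alongside access controls and separate storage, not replace them.

```python
# Hypothetical allowlist: the only purposes age-check results may serve.
ALLOWED_PURPOSES = frozenset({"access_control", "regulatory_audit"})


class PurposeViolation(Exception):
    """Raised when age-check data is requested for an unapproved purpose."""


class AgeResultStore:
    def __init__(self) -> None:
        self._results: dict[str, bool] = {}

    def save(self, user_ref: str, passed: bool) -> None:
        self._results[user_ref] = passed

    def read(self, user_ref: str, purpose: str) -> bool:
        # Every read must state why; unapproved purposes are refused outright.
        if purpose not in ALLOWED_PURPOSES:
            raise PurposeViolation(f"age-check data may not be used for {purpose!r}")
        return self._results[user_ref]
```

A request such as `store.read("acct-42", "advertising")` then fails loudly instead of quietly feeding a profiling pipeline.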

False positives and exclusion risk

Automated systems aren’t perfect. Both false positives (letting in underage users) and false negatives (blocking legitimate adults) can occur. These mistakes may be caused by poor lighting, expired documents, or demographic bias in face-based models, leading to exclusion or exposure risks.

💡Solution: Calibrate systems for your user base, offer multiple options for different risk levels, and include human-in-the-loop review for edge cases.
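Human-in-the-loop review for edge cases usually means defining a band of uncertain scores that is neither accepted nor rejected automatically. The Python sketch below assumes a model that outputs a calibrated probability that the user is over the age threshold; the cutoffs are hypothetical and should come from testing on your own user base.

```python
def decide(p_over: float, allow_at: float = 0.99, deny_below: float = 0.50) -> str:
    """Map a calibrated probability that the user is over the threshold to an action."""
    if not 0.0 <= p_over <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p_over >= allow_at:
        return "allow"
    if p_over < deny_below:
        return "deny"  # still offer another verification method or an appeal
    return "human_review"  # uncertain band: escalate instead of guessing
```

Widening the gap between `deny_below` and `allow_at` sends more users to review, trading operating cost for fewer automated mistakes.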

Protecting children’s data

Age verification systems often collect highly sensitive data from minors — IDs, face scans, even parental information. If this data is stored insecurely, it becomes a prime target for fraud and identity theft, putting children at risk and damaging companies’ reputations.

💡Solution: Obtain verified parental consent where applicable, minimize the amount of data collected, and apply strict retention limits.
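Strict retention limits are easiest to enforce as a scheduled purge with different periods for raw evidence and for verification results. The periods in this Python sketch are placeholders, not recommendations, and records of an unknown kind are dropped rather than kept (fail closed).

```python
from datetime import datetime, timedelta, timezone

# Placeholder periods: set these from your own legal analysis.
RETENTION = {
    "raw_evidence": timedelta(hours=24),          # selfies, ID images
    "verification_result": timedelta(days=365),   # pass/fail outcome
}


def purge_expired(records: list, now: datetime) -> list:
    """Return only records still inside their retention period.

    Unknown kinds get a zero-length period, so they are dropped (fail closed).
    """
    kept = []
    for record in records:
        limit = RETENTION.get(record["kind"], timedelta(0))
        if now - record["created_at"] < limit:
            kept.append(record)
    return kept


now = datetime.now(timezone.utc)
records = [
    {"kind": "raw_evidence", "created_at": now - timedelta(days=2)},
    {"kind": "verification_result", "created_at": now - timedelta(days=2)},
]
print([r["kind"] for r in purge_expired(records, now)])  # ['verification_result']
```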

Auditability and transparency

Age verification systems should not be black boxes. Users need to know what data is collected and why. Regulators need visibility into how systems function, including security protocols, data residency, retention periods, and error handling.

💡Solution: Use clear terms and explanations for users. Conduct regular independent audits, privacy reviews, and fairness assessments. Keep depersonalized logs to document the checks performed and the reasons for any failures.
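Logs that document checks without identifying users can use a keyed hash of the internal account ID plus reason codes instead of personal data. In the Python sketch below, `LOG_KEY` and the reason codes are hypothetical. Keyed pseudonyms reduce re-identification risk but can still count as personal data under laws like GDPR while the key exists, so the key should be stored and rotated separately from the logs.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical secret, stored and rotated separately from the logs.
LOG_KEY = b"replace-with-managed-secret"


def pseudonym(user_ref: str) -> str:
    """Stable per user; linkable back only by someone holding the key."""
    return hmac.new(LOG_KEY, user_ref.encode(), hashlib.sha256).hexdigest()[:16]


def audit_entry(user_ref: str, method: str, passed: bool,
                reason: Optional[str] = None) -> str:
    """One JSON log line: who (pseudonymized), how, outcome, and why it failed."""
    entry = {
        "subject": pseudonym(user_ref),
        "method": method,
        "result": "pass" if passed else "fail",
        "reason": reason,  # e.g. "image_too_dark" (illustrative reason code)
    }
    return json.dumps(entry, sort_keys=True)
```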

Chapter 6:

Expert voices on age verification

Why accuracy depends on technology and data quality

Ihar Kliashchou, Chief Technology Officer at Regula:

— When it comes to age estimation, technology quality is critical. Systems designed mainly to reduce friction or speed up onboarding often struggle to balance usability with robustness. In practice, this can lead to thresholds that are more permissive than intended.

Even the strongest system won’t perform well if the input data is poor or if the age assessment models aren’t properly trained. For example, accuracy drops significantly when users squint, turn their face away, wear glasses that obscure facial features, or use masks. Clear, well-lit, unobstructed images, with the user looking straight into the frame, are essential — and it’s the business’s responsibility to design a guided process that helps customers capture them correctly the first time.

Industry benchmarks, such as evaluations conducted by NIST, play a key role here. They test systems across diverse scenarios and help vendors fine-tune solutions for both online and on-site age checks.

One more challenge is emerging. Until recently, age verification focused on separating adults from minors, mainly within the 17–25 range. Now, the task is identifying users under 13, where existing models are far less accurate. Children develop differently across genders and ethnic groups, and high-quality training data for this age range is limited, making precise estimation much harder.

What the core technical limits of age verification are

Nikita Dunets, Deputy Director of Digital Identity Verification at Regula:

— The biggest challenge in age verification is the margin of error. If someone is between 30 and 33, the estimate is usually reliable. But when the difference is between 16 and 18, even small inaccuracies matter.

From a technical standpoint, systems can only produce probability-based estimates. The real difficulty lies in interpreting those probabilities correctly. To improve results, we need a new generation of AI-trained neural networks and clearer guidelines on how to translate probability scores into reliable decisions.

Why regulation, context, and human behavior still matter

Andrey Terekhin, Head of Product at Regula:

— Age verification exists to prevent minors from accessing restricted goods and services, which makes it heavily regulated. But there’s a contradiction: high-quality systems require data to train algorithms, while regulation often limits access to that data. At the same time, low-quality age verification solutions also create regulatory risk.

Context matters too. Online, a child may use a parent’s passport. Offline, they may persuade an adult to buy for them. Vending machines selling age-restricted products — especially in poorly lit areas — are another weak spot where systems are easy to bypass.

Solving these issues takes more than technology. It requires a broad approach that combines better tools with education. Many children see buying adult products as a sign of maturity, but changing that mindset is just as important as improving the checks themselves.

Can stronger age checks still respect user privacy?

Henry Patishman, Executive Vice President of Identity Verification Solutions at Regula:

— Australia is emerging as one of the most important early adopters of age verification regulation. Its reforms go beyond principle-based guidance and introduce enforceable technical standards that make age assurance a core platform function — not just a compliance checkbox.

By requiring platforms to verify under-16 users and obtain parental consent for older teens, Australia is moving from soft nudges to hard access controls. Age checks are now deeply embedded into platform architecture, spanning account creation, fraud prevention, and trust-and-safety operations.

This shift shows that protection doesn’t have to come at the expense of privacy or user experience. With strong Digital ID infrastructure and interoperable standards, Australia’s model could shape how age assurance is implemented globally.

Chapter 7:

Data, studies & benchmarks

Age verification is now a priority not only for regulators and solution providers but also for businesses and end users. As legal requirements expand into traditionally unregulated sectors like social media, technology providers are pushing to improve accuracy, usability, and compliance.

Independent labs such as NIST and iBeta play a key role in evaluating these systems through standardized benchmarks and testing programs. Together, these efforts help shape best practices and raise the bar for the industry.

Below are key resources to deepen your understanding of where age verification technology stands today — and where it’s headed:

Watch free webinars:

Biometrics Under Attack: Inside the Real Fight Against Fraud

The Future of Fakes: Deepfakes and the Next Digital Challenge

Chapter 8:

How age verification can be implemented

Age verification systems vary widely in functionality, purpose, and tech stack. However, most implementations follow the same core principles to ensure they’re accurate, compliant, and user-friendly.

1. Define the purpose

Implementation starts by clearly identifying why age verification is needed. Different use cases like accessing restricted content, purchasing regulated goods, creating accounts, or meeting platform compliance require different levels of assurance. Defining the purpose helps determine the right balance between accuracy, user friction, and method (e.g., age estimation, document-based verification, or a hybrid model).

💡Pro tip: You can use an age verification risk-tier model based on factors such as the harm level from underage access (the nature of the content), age threshold, incentive for underage users to bypass controls, audience scale, and regulatory exposure.

Age verification risk-tier model

| Risk factor | Low | Medium | High |
|---|---|---|---|
| Harm level | Mild, low-harm content; exploratory access | Mature content on community platforms; limited financial impact | Adult content, gambling, alcohol, tobacco, self-harm exposure, social media access at 16–18; significant legal harm |
| Age threshold | Under 16 | 16–17 | 18 or older |
| Likelihood of underage bypass attempts | Low | Moderate | High |
| Audience scale | Small | Mid-sized (tens or hundreds of thousands of users) | Mass-market platforms |
| Regulatory exposure | Guidance only | Moderate risk (defined obligations, limited enforcement) | Strict enforcement (large fines, child-safety focus) |

This model works well with a decision tree approach, where a structured, step-by-step logic map shows how a company arrives at a specific age-verification method based on risk factors.

Example: If the harm from underage access is low, the age threshold is below 16, and the consequences are limited, the use case can be considered low risk. In practice, this can be addressed through parental controls or even self-declaration. By contrast, adult content — where the incentive to bypass age checks is high and enforcement is active — typically requires strong age assurance with a clear audit trail.
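The decision-tree logic can start with a simple aggregation step that turns factor ratings into an overall tier. The Python sketch below uses one conservative rule, an assumption rather than part of any standard: the highest-rated factor sets the tier. The `threshold_rating` helper mirrors the Age threshold row of the table; other factors are rated by hand, and the factor names are illustrative.

```python
from enum import IntEnum


class Tier(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def threshold_rating(age_threshold: int) -> Tier:
    """Mirror the 'Age threshold' row: under 16 low, 16-17 medium, 18+ high."""
    if age_threshold >= 18:
        return Tier.HIGH
    if age_threshold >= 16:
        return Tier.MEDIUM
    return Tier.LOW


def overall_tier(ratings: dict) -> Tier:
    """Assumed conservative rule: the highest-rated factor sets the overall tier."""
    if not ratings:
        raise ValueError("rate at least one factor")
    return max(ratings.values())


# Example loosely based on the adult-content case described above.
adult_site = {
    "harm_level": Tier.HIGH,
    "age_threshold": threshold_rating(18),
    "bypass_likelihood": Tier.HIGH,
    "audience_scale": Tier.MEDIUM,
    "regulatory_exposure": Tier.HIGH,
}
print(overall_tier(adult_site).name)  # HIGH
```

Taking the maximum means a single high-risk factor is never averaged away; a weighted score is an alternative if your legal analysis supports it.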

Although this risk-tier model isn’t a ready-made methodology or a legal requirement, it relies on established standards and guidelines. For example, the UK ICO’s guidance recommends applying a risk-based approach and considering the audience's age range and different needs when implementing age-appropriate measures for various services.

The same logic appears in broader digital identity guidance, such as NIST SP 800-63. It encourages organizations to collect only what’s needed — for example, confirming that someone is over a certain age instead of collecting their full date of birth — and to apply different levels of assurance depending on the risk involved during enrollment.

2. Choose the right technology

Once the goal is clear, the next step is selecting the appropriate tools. Common technologies include:

ID document scanning with authenticity checks

Biometric verification (e.g., facial recognition and matching, liveness detection)

Database lookups

Facial age estimation

Many companies use third-party APIs to streamline implementation, reduce development effort, and ensure scalability and security.

💡Pro tip: You can select methods based on the risk levels defined earlier. In particular, justify any use of biometrics, as this is always an area of regulatory interest.

Typically, biometric-based methods are appropriate only if they are necessary (when non-biometric approaches fail or are insufficient), proportionate (for instance, when risk and age thresholds are high), and properly safeguarded (with strong additional controls in place).

Age verification method matrix by risk level

| | Low risk | Medium risk | High risk |
|---|---|---|---|
| Methods | Basic methods with no biometric data involved | Methods where biometrics may apply in some cases | Strong methods where biometrics are often justified |
| Additional controls | Logging and periodic reviews | Defined error limits, bias review, appeals process | Ongoing monitoring, strong safeguards, clear audit trails |

This matrix is one possible, common-sense decision-making approach. However, it reflects widely accepted ideas around strong privacy safeguards, clear protections, and more trustworthy ways to manage age-related access online. These ideas are set out in the ISO/IEC 27566-1:2025 standard, which aims to help organizations decide whether someone meets an age requirement without forcing full identity verification in every case, and in a way users can accept.

A similar approach appears in the European Data Protection Board (EDPB) Statement 1/2025. In particular, the statement emphasizes that organizations should collect only personal data that is necessary, adequate, and relevant for a given purpose. At the same time, an age-assurance system still needs to work accurately and consistently in practice. It should be accessible, reliable, and robust, allowing users to challenge and correct errors, and be strong enough to prevent cheating.

3. Test in real-world conditions

Before scaling up, it’s critical to test the system in real-world scenarios. Evaluate performance across:

Devices (mobile, desktop)

Lighting and environmental conditions

User demographics

Varying network quality

Pilot runs and A/B tests can uncover false rejections, friction points, or edge cases, giving you time to optimize before full deployment.

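💡Pro tip: Independent benchmark results, such as NIST’s evaluations of age estimation algorithms, help you balance process simplicity and conversion rates against reliability and risk. For example, when age estimation is used to keep minors out at an 18+ threshold, where the difference between 17 and 18 matters, a common practice is to require an estimated age of 25 rather than 18. Users estimated at 25 or older pass without further steps, while everyone else is routed to a stronger method such as an ID check. This reduces the risk of admitting minors while keeping friction low for adults well above the threshold.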
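The challenge-age rule from the tip can be written as a small policy function. In this Python sketch, the values 18 and 25 come from the example above, `step_up_to_id_check` is a hypothetical action name, and the buffer between the legal age and the challenge age should be wider than the estimation error you measured for the relevant age band.

```python
def challenge_age_decision(estimated_age: float, legal_age: int = 18,
                           challenge_age: int = 25) -> str:
    """Accept on estimation alone only above a buffer; otherwise step up."""
    if challenge_age < legal_age:
        raise ValueError("challenge age must not be below the legal age")
    if estimated_age >= challenge_age:
        return "pass"
    # Estimates between the legal age and the challenge age are too close
    # to call, and lower estimates may still be adults: verify with an ID.
    return "step_up_to_id_check"
```

Note that the function never rejects on estimation alone: a 20-year-old who looks younger is routed to an ID check rather than blocked.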

4. Ensure legal and regulatory compliance

Your system must meet relevant data protection laws (like GDPR), age-restriction regulations, and privacy standards. Key compliance factors include:

Lawful basis for processing

Data minimization

Secure storage and short retention

Transparency and user rights

As laws evolve, regular audits are needed to ensure continued alignment.

5. Optimize UX and UI

Technology alone isn’t enough: user trust and ease of use matter too. Customize the verification flow to match your audience. Explain why age checks are needed and what will (and won’t) happen with users’ data.

Improvements like progressive disclosure, mobile-first design, fallback options, and localized messaging help reduce drop-offs and boost conversions while staying compliant.

Learn more:

A Critical Step to Take Before Buying an Identity Verification Solution to Maximize Success

How to Customize Mobile UI and Facilitate User Experience in Identity Checks

Bonus:

FAQ on age verification

Is age verification mandatory?

In many cases, yes, but it depends strongly on the jurisdiction. Age verification is typically legally required for platforms offering access to age-restricted goods, services, or content, with the exact rules depending on local laws, industry, and platform type. Even in areas without explicit mandates, regulators increasingly expect platforms to have reasonable age assurance measures in place.

Can platforms store age-related data?

Only with clear legal grounds. Platforms may store age-related data if it complies with data protection principles such as data minimization and purpose limitation. In most cases, storing only the verification result (e.g., pass/fail) is safer than retaining raw personal data. Retention should be limited, and users must be informed about how their data is handled.

Are biometrics legal for age checks?

Yes, but with strict conditions. Biometric technologies like facial recognition or liveness detection can be used for age verification in many regions. However, they typically require explicit user consent or another justified legal basis, along with strong security controls. Some jurisdictions impose tighter rules or outright bans, so legal review is essential before rollout.

How accurate are AI age-estimation tools?

Good for some cases, not all. AI age estimation has improved and works well for low- to medium-risk use cases. Accuracy depends on model quality, training data, and factors like lighting and image clarity. In high-risk or regulated scenarios, it’s often combined with more reliable verification methods. Using test results from independent, reputable labs such as NIST also helps assess the technology's real-world performance.

What are the risks if platforms get age checks wrong?

They’re serious. Poor or missing age verification can lead to regulatory fines, lawsuits, service suspensions, and reputational damage. It also erodes user trust and increases long-term business risk. Ensuring proper implementation is not just about compliance — it's essential for credibility and user safety.

Can age verification be done without collecting ID documents?

Yes, it can. Some age verification methods don’t require users to submit an ID. Instead, they use signals like facial analysis, mobile network data, credit card ownership, or government-backed digital IDs. These methods can estimate or confirm a user’s age without revealing full identity details, helping to protect privacy while ensuring compliance.

How can users challenge incorrect age decisions?

Users should be given a clear and simple way to appeal if they believe an age decision was wrong. This usually involves contacting the platform or verification provider and providing additional proof (like an ID or parental consent). Transparency and fast resolution are key — especially when access to essential services or content is blocked.

Who is legally responsible if age verification fails — the platform or the third-party provider?

Typically, the platform is legally responsible. Even if a third-party provider performs the checks, regulators often hold the platform accountable for user safety and compliance. That’s why it’s crucial for platforms to choose trusted providers and ensure the verification process meets legal standards.

What do the mass media often get wrong about age verification?

There are several common misconceptions. Many reports suggest that an age verification process always includes uploading a government ID. Others claim that AI-based age estimation is either illegal or too unreliable to be useful. And it’s often assumed that privacy must be sacrificed to enforce age checks.

In reality, there are risk-based methods that don’t require identity documents. Age estimation can be accurate and legal when used with proper safeguards. And with the right setup, platforms can protect both users and their privacy.